AI Terms Every Adult Should Know (Plain Language Guide for Adults 45+)

By Rado

AI terms can feel like walking into a workshop with unfamiliar tools. You do not need to master every gadget. You just need the right names so you can ask better questions, spot risks, and get useful help. In this guide, you will learn the core terms in plain English, see quick examples from everyday life, and use simple safety checks you can apply today.

What do people mean by “AI,” and how is it different from machine learning?

You open your bank app and see a small alert about a suspicious charge. A minute later, your maps app suggests a quicker route around traffic. That quiet help is what most people call AI. It feels like the app is thinking along with you.

So what is AI, really? In plain language, AI is when computers perform tasks that normally need human thinking. Seeing patterns. Understanding words. Making simple decisions. It is the big umbrella idea.

Under that umbrella sits machine learning. This is the method many AI systems use to get good at tasks. Instead of being hand‑programmed for every situation, the system looks at many examples and learns patterns. The more relevant examples it sees, the better its guesses become.

You might be wondering, how is deep learning different again? Think of it as a powerful kind of machine learning that stacks many layers of tiny decision makers. Each layer notices a little more detail. One layer notices edges. The next layer spots that there is a shape. Later layers see a face or a fraud pattern. That is why photo apps can group your family pictures, and why email can filter spam so well.

Here is a simple way to separate the terms:

AI is the goal. Make software that can act a bit like a smart helper.

Machine learning is a way to reach that goal. Learn patterns from data.

Deep learning is one strong approach inside machine learning. Many layers. Lots of examples.

Why should you care about the difference? Because it helps you ask better questions. If a tool says it uses AI, you can ask, what data was it trained on? Does it keep my information? Can I review or delete it? If it uses machine learning, you can ask, does it keep learning after I start using it, or is it fixed? Those questions lead to safer choices.

It is normal to feel unsure at first. These words get thrown around in marketing. So here is a grounding example. A rule‑based program is not learning. It follows a script. A machine learning system is learning from data. It updates its inner settings to get better at a task. Deep learning uses many stacked layers to learn complex patterns, like spotting a voice match or reading handwriting.
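
If you like to peek under the hood, here is a minimal Python sketch of that difference. It is an illustration only, not how any real product works. The function names and the four example messages are made up for this guide. The first function follows a fixed script. The second one counts words in labeled examples and uses those counts to make its guess.

```python
from collections import Counter

# Rule-based: a fixed script written by a person. It never changes on its own.
def rule_based_is_spam(message: str) -> bool:
    return "free money" in message.lower()

# Learning from examples: count which words appear in spam and in normal mail.
def train(examples: list[tuple[str, bool]]) -> tuple[Counter, Counter]:
    spam_words, normal_words = Counter(), Counter()
    for text, is_spam in examples:
        target = spam_words if is_spam else normal_words
        target.update(text.lower().split())
    return spam_words, normal_words

def learned_is_spam(message: str, spam_words: Counter, normal_words: Counter) -> bool:
    words = message.lower().split()
    spam_score = sum(spam_words[w] for w in words)
    normal_score = sum(normal_words[w] for w in words)
    return spam_score > normal_score

# Made-up training examples, for illustration only.
EXAMPLES = [
    ("claim your prize now", True),
    ("you won a prize click now", True),
    ("lunch on friday", False),
    ("see you at lunch", False),
]

spam_words, normal_words = train(EXAMPLES)
print(rule_based_is_spam("Claim your prize"))                         # the fixed rule misses it
print(learned_is_spam("claim your prize", spam_words, normal_words))  # the learned counts catch it
```

The rule only catches the exact phrase someone typed into it. The learned version catches a new message because it has seen similar words in spam before. Give it more examples and its guesses change, with no one rewriting the rule.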

What do you get from all this in daily life? More helpful apps, when used wisely. Better spellcheck. Smoother photo search. Faster fraud alerts. Also a need for guardrails. Strong passwords. Two‑factor authentication. And a habit of checking app settings for privacy options.

You might ask, where does this go next? In the next section, we will look at neural networks and deep learning in plain language so you can see how those “layers” work without any math.

The takeaway: AI is a big idea. Machine learning is how many AIs learn. Deep learning is one kind of machine learning that handles complex patterns. Knowing the difference helps you choose tools and ask the right safety questions.

What are neural networks and deep learning—in plain language?

Imagine you are sorting socks after laundry. First you check color. Then size. Then the tiny pattern around the cuff. Step by step, your brain turns a messy pile into neat pairs. A neural network works in a similar layered way. It takes in raw data, runs it through a series of simple checks, and ends with a useful decision.

So, what is a neural network? Think of many small decision makers working together. Each one answers a simple question like “Is there a curve here?” or “Does this sound like the letter A?” A single check is not very smart. But hundreds or thousands of them, stacked in layers, can spot complex patterns such as a face in a photo or a strange payment on your card.

Deep learning refers to neural networks with many layers. The word “deep” points to the number of layers, not to anything mysterious. Why does depth help? Early layers notice simple features such as edges and corners. Middle layers combine those into shapes. Later layers recognize complete things like cats, invoices, or voice tones. More layers can capture more subtle details, as long as the model has enough good examples to learn from.

You might be wondering, do I need to know math? Not at all. A kitchen analogy is enough. Each layer is like a station on a prep line. One station rinses. Another chops. Another seasons. By the end, you have a finished dish. In a neural network, each layer prepares the data a bit more until the system can make a call such as “spam” or “not spam.”

Here is where you meet deep learning in daily life. Phone cameras that brighten a dim room. Email filters that keep junk out. Apps that transcribe voicemail into text. Streaming services that suggest a documentary you will likely enjoy. The better the training data and the clearer the goal, the more helpful the results.

It is normal to feel cautious. These systems can still be wrong. A camera may blur a photo. A filter may hide a message you need. So, what can you do? Keep human judgment in the loop. Check important results. Enable account alerts. Review settings that control how your data is used for training.

Two quick distinctions can help you talk with confidence:

Machine learning is the broad idea of learning patterns from data.

Deep learning is a powerful approach within machine learning that uses many layered checks to learn complex patterns.

The takeaway: A neural network is a team of simple decision makers arranged in layers. Deep learning adds many layers so the system can recognize complex patterns. You do not need the math to use the benefits. Keep an eye on settings and always double check important outcomes.

What is generative AI and a large language model (LLM)?

You type a rough note that says, “Trip to Prague, 3 days, art, good coffee, one museum.” In seconds the app turns it into a tidy plan with morning ideas, a lunch spot, and a walking route. That is generative AI at work. It creates new content from your instructions.

So what counts as generative AI? Any tool that can produce text, images, audio, or video from a prompt. Write a polite refund email. Create a packing list. Draft a birthday poem. Make a simple logo. These are common, everyday uses you might try this week.

Now the term you hear often. Large language model, or LLM. In plain language, an LLM is a program that has read a lot of text and learned how words flow together. It tries to predict the next likely word based on the words you already wrote.

Because it has seen many examples, it can draft, summarize, translate, and explain quickly.
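
To make "predict the next likely word" concrete, here is a toy Python sketch. It is far simpler than a real LLM and is not how any specific product is built. It only counts which word tends to follow which in a tiny, made-up practice text. The function names are invented for this guide.

```python
from collections import Counter, defaultdict
from typing import Optional

# A tiny made-up "training text". Real models learn from vastly more text.
TEXT = "thank you for your help . thank you for your time . thank you very much ."

def learn_next_words(text: str) -> dict[str, Counter]:
    """Count which word follows each word in the text."""
    follows: dict[str, Counter] = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        follows[current][following] += 1
    return follows

def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if it was never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]

follows = learn_next_words(TEXT)
print(predict_next(follows, "thank"))   # you
print(predict_next(follows, "you"))     # for
print(predict_next(follows, "prague"))  # None, because it never appeared in the practice text
```

Notice the last line. When the toy model has never seen a word, it has nothing to go on. Real models usually produce an answer anyway, which is one reason they can sound sure and still be wrong.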

You might be wondering, is it thinking like a person? No. It is very good at pattern prediction. That can feel like understanding, yet it sometimes makes confident mistakes. This is why clear prompts and quick checks matter.

Here is a simple prompt formula you can reuse:

Goal: what you want. “Write a two paragraph email asking for a refund.”

Context: who and what. “I bought a travel bag last week. The zipper broke.”

Format: how to present it. “Keep it friendly, include order number, end with a thank you.”

Want better results? Add a short example. Or ask the model to list options before it writes the final version. Small steps reduce errors and give you more control.

Where might you use this in daily life? Cleaning up notes after a doctor visit. Turning bullet points into a clear message for your family chat. Creating a recipe from what is already in your fridge. Summarizing a long article into five key points. These are the low stress places to start.

What about risks? It is normal to feel cautious. Generative tools can produce made up facts, incorrect numbers, or fake quotes. They can also mirror biases found in the data they were trained on. So build in a simple safety habit. Ask for sources when it matters. Paste in the source text and ask the model to quote only from it. Check sensitive facts with a trusted website. When an answer sounds too neat or too specific, pause and verify.

Privacy also matters. Review the app’s data settings. Does it save your chats to improve the model? Can you opt out? Avoid pasting private IDs, passwords, or full medical records into any tool unless you are sure about its protections.

You might ask, what settings should I try first? If your tool has a creativity slider, start in the middle for everyday tasks. Go lower for legal text, instructions, or numbers. Go higher for brainstorming names or taglines.
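
In many tools, a slider like this is tied to a setting commonly called temperature. The Python sketch below is a simplified illustration under that assumption. Your tool may work differently. The three words and their scores are invented. The code only shows how a lower or higher setting changes the chance of each word being picked.

```python
import math

def word_chances(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into chances that add up to 1 (a softmax with temperature)."""
    scaled = {word: score / temperature for word, score in scores.items()}
    biggest = max(scaled.values())  # subtracting the largest value keeps the math stable
    exps = {word: math.exp(value - biggest) for word, value in scaled.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}

# Made-up scores for the next word after "The coffee was".
SCORES = {"good": 3.0, "strong": 2.0, "purple": 0.5}

low = word_chances(SCORES, temperature=0.5)   # slider turned down
high = word_chances(SCORES, temperature=2.0)  # slider turned up

for word in SCORES:
    print(f"{word}: low setting {low[word]:.2f}, high setting {high[word]:.2f}")
```

At the low setting the top word takes most of the chance, so answers stay predictable. At the high setting unusual words get a real chance, which helps with brainstorming and hurts with numbers.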

Next we will look at prompts in more detail so you can get repeatable results and avoid the most common tripwires.

The takeaway: Generative AI creates new content from your instructions. An LLM predicts likely next words to draft, summarize, and explain. Use clear prompts, ask for sources when it matters, and keep private details out of your chats unless you trust the tool’s protections.

What is a “prompt,” and how do you write one that works?

Think of a prompt as the instructions you give a helpful assistant. Clear in. Useful out. Vague in. Messy out. You do not need special wording. You need a simple recipe you can repeat.

Here is a three step formula that covers most tasks:

Goal. Say exactly what you want. Summarize, rewrite, list steps, draft an email, or create options.

Context. Who is it for and what do they need? Add any facts, tone, limits, or examples.

Format. Tell the tool how to present the result. A short email. Five bullets. A table with three columns.

You might be wondering, do I have to sound formal? Not at all. Speak like you normally would. The key is to be specific. If you want an email, say the tone, the length, and the one thing you must include.

Let us try a few ready to use prompts you can copy:

Refund email: "Write a friendly two paragraph email to customer support. Product: travel bag. Bought last week. The zipper broke on day two. Include order number 58423 and ask for a refund or replacement. End with a thank you."

Trip plan: "Create a three day plan for Prague for two adults who like art and coffee. Low cost options. Walkable routes. List morning, lunch, and afternoon ideas for each day."

Recipe help: "I have eggs, tomatoes, spinach, and feta. Suggest three quick dinner ideas. 20 minutes max. Grocery list at the end."

Want more control? Put a short example before the task. This is called few-shot prompting. Show the model the style you want, then ask it to continue. You can also ask for options first, then pick one to expand.

Common tripwires to avoid:

Asking for everything at once. Break big tasks into steps. Outline first. Draft next. Edit last.

Vague goals. Say who it is for and what success looks like.

Missing constraints. Set limits for length, tone, and what to include or avoid.

No review. Ask the tool to double check numbers and to quote only from the text you gave it.

Here is a simple template you can save:

"You are [role]. Task: [what to do]. Audience: [who]. Constraints: [tone, length, must include or avoid]. Inputs: [facts, bullets, pasted text]. Output format: [email, bullets, table]."
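
If you keep your prompts in a small script or notes app, the template above can be filled in automatically. Here is a minimal Python sketch. The function name and the sample values are made up for this guide. It refuses to build the prompt if you leave a slot empty, which guards against the "vague goals" and "missing constraints" tripwires.

```python
TEMPLATE = (
    "You are {role}. Task: {task}. Audience: {audience}. "
    "Constraints: {constraints}. Inputs: {inputs}. Output format: {output_format}."
)

REQUIRED = ["role", "task", "audience", "constraints", "inputs", "output_format"]

def fill_template(**fields: str) -> str:
    """Fill every slot of the template, or raise an error naming the empty ones."""
    missing = [name for name in REQUIRED if not fields.get(name, "").strip()]
    if missing:
        raise ValueError("Please fill in: " + ", ".join(missing))
    # Trim spaces and a trailing period so the sentence punctuation stays tidy.
    cleaned = {name: fields[name].strip().rstrip(".") for name in REQUIRED}
    return TEMPLATE.format(**cleaned)

prompt = fill_template(
    role="a friendly customer support writer",
    task="write a refund request",
    audience="the store's support team",
    constraints="two short paragraphs, polite tone",
    inputs="travel bag bought last week, zipper broke on day two",
    output_format="email",
)
print(prompt)
```

You can paste the printed text straight into your AI tool. Change one slot at a time to see which detail improves the answer most.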

It is normal to feel unsure at first. Prompts get better with practice. Try a small task you do every week. Cleaning up notes. Turning a voice memo into a short plan. Editing a message to sound clear and polite. Did it help? What would make it safer or faster next time?

Before we move on, ask two quick questions. Did I give the tool enough context to avoid guessing? Did I ask for the format I want to copy and paste?

Next we will look at tokens, parameters, and context window. These terms will help you understand length limits and why the tool sometimes forgets earlier parts of a long chat.

The takeaway: A prompt is your instruction. Use Goal, Context, and Format. Start small, add one example when needed, and break big tasks into steps for cleaner results.

Tokens, parameters, and context window—do these matter to you?

Let’s make this simple. Think of an AI chat like a table conversation with limited space for notes. You can talk for a while, but not forever. Three terms explain why the tool sometimes forgets earlier parts or asks you to shorten things: tokens, parameters, and context window.

Start with tokens. A token is a tiny chunk of text. Often a few letters or part of a word. Tools measure length in tokens, not characters. Why should you care? Because both your message and the AI’s reply spend tokens. If you paste a very long document, you might hit the limit and the tool will skip or trim something.

Now parameters. You may hear that a model has many billions of parameters. What are these? They are the internal settings the model learned during training. You can think of parameters as the model’s memory of how language works. More parameters can help with nuance, but they also need careful training and strong safeguards. You do not manage parameters yourself. You simply choose a model that fits your task.

Next, the context window. This is the capacity of what the model can keep in mind at once. Imagine a whiteboard that can only hold so much text before you have to erase. If your chat grows very long, or if you paste large documents, earlier details can fall off that whiteboard. That is why the model may repeat questions or lose track of a name.
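
Here is a small Python sketch of the whiteboard idea. It rests on two loud assumptions. First, it estimates tokens as roughly one per four characters, which is only a rule of thumb for English. Real tools count tokens with their own methods. Second, it assumes the tool simply drops the oldest messages when space runs out. Some tools summarize instead. The function names are invented for this guide.

```python
def rough_token_count(text: str) -> int:
    """Very rough estimate: about one token per four characters of English text."""
    return max(1, len(text) // 4)

def fit_in_window(messages: list[str], window_tokens: int) -> list[str]:
    """Keep the newest messages that fit. Older ones fall off the whiteboard."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # start from the most recent message
        cost = rough_token_count(message)
        if used + cost > window_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # put the kept messages back in their original order

chat = [
    "My sister's name is Marta.",
    "We are planning a trip to Prague in May.",
    "Please suggest three museums.",
]

# With a tiny window, the oldest message no longer fits.
print(fit_in_window(chat, window_tokens=18))
```

In this toy chat, the sister's name is the first thing to fall off. That is the same effect you see when a long conversation loses track of a detail from the beginning.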

You might be wondering, how do I work within these limits? A few habits help.

Break big tasks into steps. Ask for an outline first. Then expand one section at a time.

Use summaries. If the chat is long, ask for a short recap you can refer back to.

Point back to the source. Paste the key paragraph and say, “quote only from this.”

Use files wisely. Instead of pasting a whole report, paste the parts you need.

Save important facts. Keep a small cheat sheet of names, dates, and numbers, and paste it at the start of a new turn.

Another question you might have. Why does pricing mention tokens? Many tools charge by the number of tokens you send and receive. Shorter, clearer prompts not only perform better, they can also cost less.

What about model choices? If you see an option with a larger context window, pick it for long documents, transcripts, or complex projects. If you only need a short email or a few bullet points, a smaller model is often enough.

It is normal to feel uncertain about these limits. You do not need to count tokens by hand. Just watch for signs. The tool stops mid sentence. It asks you to shorten a paste. It forgets a detail you just gave. When that happens, split the task and keep going.

Before we move on, try one quick exercise. Take a long message you wrote. Trim filler words. Label each paragraph with a short heading. Add a clear request at the end. Did the response get sharper?

Next, we will look at training data, fine tuning, and retrieval. These explain how a model learns, how it can adapt, and how it can use your own documents during a chat.

The takeaway: Tokens measure length. Parameters are the model’s learned settings. The context window is how much the model can hold in mind at once. Work in steps, use summaries, and pick the right model size for the job.

What is “training data,” “fine‑tuning,” and “retrieval,” and why should you care?

Imagine you are teaching a new helper to sort your mail. First, you show many examples of bills, letters, and ads. That pile of examples is the training data. Next, you give a quick lesson on your household rules: “Anything from the clinic goes in the urgent tray.” That is like fine‑tuning. Finally, when the helper gets stuck, you let them open your folder to look up a specific policy before deciding. That is retrieval.

Start with training data. Every AI model learns from examples. Articles, books, help pages, public websites, sometimes licensed datasets. The quality and freshness of that data shape what the model can do. If the data is outdated or noisy, answers can be off. If the data is balanced and recent, results tend to be clearer.

So, why should you care? Because knowing the source helps you judge trust. You might be wondering, was this trained on public web pages, on private customer records, or on a curated set with permissions? It is a fair question. Reputable tools tell you in their docs or safety pages. If the answer is vague, keep your guard up.

Now fine‑tuning. Think of it as teaching the model your house style. A clinic might fine‑tune a model to answer patient FAQs in a calm, compliant tone. A company might fine‑tune for its product names and refund steps. Fine‑tuning uses additional examples to adapt the base model without retraining it from scratch. You get more consistent answers in your voice.

When should you use fine‑tuning? If you need stable phrasing, domain‑specific terms, or responses that must follow set policies. If your needs change often, you might prefer retrieval instead.

That brings us to retrieval. Retrieval means the model looks up information from a trusted source at answer time. You point the tool to your PDFs, web pages, or notes. The model searches those documents and forms its reply from what it finds. You keep the source of truth in your control. You can update a file and the next answer reflects the change.
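
For the curious, here is a bare-bones Python sketch of that look-up step. It is a stand-in, not a real product: it picks the document that shares the most words with your question, where real tools use smarter search. The two "files" and their contents are made up for this example, and so are the function names.

```python
import re

# Made-up example files, standing in for your own policy documents.
DOCUMENTS = {
    "return_policy.txt": "Refunds are available within 30 days of purchase with a receipt.",
    "shipping.txt": "Standard shipping takes five business days.",
}

def key_words(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, documents: dict[str, str]) -> tuple[str, str]:
    """Return the (file name, text) that shares the most words with the question."""
    asked = key_words(question)
    best = max(documents, key=lambda name: len(asked & key_words(documents[name])))
    return best, documents[best]

def grounded_prompt(question: str, documents: dict[str, str]) -> str:
    """Build a prompt that tells the model to answer only from the retrieved text."""
    name, passage = retrieve(question, documents)
    return (
        f"Answer using only this text from {name}, and quote it:\n"
        f'"{passage}"\n'
        f"Question: {question}"
    )

print(grounded_prompt("How many days do I have for refunds?", DOCUMENTS))
```

If you edit the text stored under return_policy.txt, the very next prompt carries the new wording. Nothing has to be retrained, which is the main appeal of retrieval.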

You might ask, which one is safer? Retrieval usually wins for current, factual answers because it can cite the exact page. Fine‑tuning is great for tone and structure but can bake in old facts if you do not refresh it. For many small teams, a simple retrieval setup (sometimes called RAG) plus a style guide prompt is the sweet spot.

Privacy questions are normal. Before you share documents, check where files are stored, how long they are kept, and who can access them. Can you delete them? Can you turn off training on your data? Look for clear toggles in settings and a short retention policy.

Practical tip to try this week. Pick one process you repeat, such as answering customer emails. Create a small folder with your policy PDF and a few good examples. Ask your AI tool to answer only using those files, and to quote or link the source. Does accuracy improve? Do you feel more in control?

The takeaway: Training data teaches the base model. Fine‑tuning adapts it to your style or niche. Retrieval lets the model use your current documents at answer time. Start with retrieval for facts, and add fine‑tuning when you need a steady voice.

What are embeddings and a vector database—why does search feel smarter now?

Think about how you search for a memory. You might say, “That trip with the red umbrella photo near the old bridge.” You did not remember the file name. You remembered the meaning. Embeddings help computers do the same.

Start with embeddings. An embedding is a numeric fingerprint that captures meaning. Instead of storing the exact words, the system turns a sentence, image, or even a short audio clip into a list of numbers that reflect its gist. Two things with similar meaning have fingerprints that sit close together in this number space.

So what is a vector database? It is a special kind of storage designed to handle those numeric fingerprints. When you search, the system looks for nearby fingerprints rather than exact word matches. That is why you can find “budget‑friendly coffee in Prague” even if the file only says “cheap café near Old Town.” The meaning matches, even if the wording does not.
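
Here is a tiny Python sketch of "looking for nearby fingerprints." The fingerprints are hand-made lists of three numbers, invented purely for illustration. Real embeddings are produced by a model and have hundreds or thousands of numbers. The closeness measure used here, cosine similarity, is one common choice, and real systems may use others.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Closeness of two fingerprints: near 1.0 means similar, near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    length_a = math.sqrt(sum(x * x for x in a))
    length_b = math.sqrt(sum(y * y for y in b))
    return dot / (length_a * length_b)

# Hand-made fingerprints. Positions: [about coffee, about low prices, about hiking]
STORED = {
    "cheap café near Old Town": [0.9, 0.8, 0.0],
    "mountain trail map": [0.0, 0.1, 0.9],
}

def nearest(query: list[float], stored: dict[str, list[float]]) -> str:
    """Return the stored item whose fingerprint is closest to the query's."""
    return max(stored, key=lambda name: cosine_similarity(query, stored[name]))

# A made-up fingerprint for the search "budget-friendly coffee in Prague".
query = [0.8, 0.9, 0.0]
print(nearest(query, STORED))
```

The search and the stored note share no important words, yet their numbers sit close together, so the café note wins. A vector database does this same comparison quickly across many thousands of items.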

Where do you see this in daily life? Smarter search bars that find the right note when you barely remember the title. Photo apps that surface “hiking” pictures even if you never tagged them. Help centers that answer “how do I get a refund” when the article is titled “return policy.”

You might be wondering, is this safe for private files? It can be, with the right setup. Good tools encrypt data, isolate your index from others, and let you delete entries. Always review how your data is stored and who can access it. If a tool offers a “do not use my data to train” toggle, consider using it.

A few practical tips:

Organize your key documents (PDFs, FAQs, policies) into one folder. Consistent titles help.

Add short summaries at the top of long files. Better summaries lead to better fingerprints.

When you ask a question, include a few keywords you expect to appear in the right document. This narrows the search.

For recurring tasks, build a small “knowledge pack” with your most used files and keep it updated.

Curious how this pairs with retrieval? Embeddings power the “find relevant passages” step. The model then reads those passages to craft the answer. That is why people often say “RAG” (retrieval‑augmented generation). It is just search plus reading, wired together.

It is normal to feel a bit abstract about numbers in space. The key is the benefit you feel: finding the right thing faster, even when you cannot remember the exact words.

The takeaway: Embeddings are numeric fingerprints of meaning. A vector database stores those fingerprints and finds nearby matches. Together they make search feel smart and forgiving—great for notes, help centers, and Q&A over your own files.

What does “multimodal” mean?

Imagine asking your phone, “What is this plant?” while pointing the camera at a leaf. Or snapping a photo of a parking sign and saying, “Read this to me and tell me when I need to move the car.” When a tool understands images and text together—and sometimes audio and video too—that is multimodal.

In plain language, multimodal means an AI can work with more than one kind of input or output. Text plus images. Text plus audio. In some tools, all four: text, image, audio, and video. Why does this matter? Because life is not just words. Receipts are pictures. Meetings are speech. Travel plans are maps and lists.

Here are a few everyday uses:

Translate a menu by taking a photo and asking for the English names of the dishes.

Add a photo of your pantry and ask for three dinner ideas that use what you have.

Take a picture of a device error message and ask for steps to fix it.

Record a short voice note and ask for a clean, polite message you can send.

You might be wondering, does it work well with images? It is improving fast, but it is not perfect. A model may misread a blurry sign or miss small print. When details matter—medicine labels, legal documents, financial forms—double check with a human or the original source.

Accessibility is a major benefit. Tools that can describe pictures, read text aloud, or summarize a long recording help more people participate. If you or a family member has low vision, hearing changes, or prefers listening to reading, multimodal features can make a real difference.

Privacy deserves attention too. Photos and recordings often include sensitive details: faces, addresses, license plates, medical info. Review the app’s settings. Can you opt out of saving images? Can you delete uploads? Avoid sharing private IDs or paperwork unless you trust the protections.

Practical tips to get good results:

Take clear photos with good lighting. Crop to the important part when possible.

Add short text context with your image. “This is my Wi‑Fi router label; help me find the model number.”

For audio, record in a quiet room and speak at a steady pace. Then ask for a summary or action list.

If the outcome matters, ask the tool to restate the key details back to you before you act.

Curious what’s next? Multimodal agents that can see your screen (with permission), click buttons, and fill in simple forms are on the horizon in many apps. Start small with safe tasks and grow from there.

The takeaway: Multimodal AI can use and produce text, images, and audio (sometimes video). It shines for translation, explanations, and everyday fixes. Keep privacy in mind, give clear context, and double check important details.

What is an AI “agent,” and when is it useful?

Think of an agent as an assistant that can follow steps, use tools, and report back. Instead of only answering in text, it can check your calendar, draft an email, look up a flight, or fill a form—if you allow it.

In plain language, an agent is an AI system that can take actions. It plans a small sequence of tasks, calls other apps through safe connections, and returns results for your review. The key is permission and visibility. You stay in control.

Everyday places you might use an agent:

Inbox cleanup: label newsletters, draft replies, and schedule a follow‑up.

Travel admin: find flights under a budget, hold an option, and add your itinerary to the calendar.

Paperwork: pull key fields from a PDF and paste them into an online form you approve.

Research: gather three recent articles on a topic and summarize the main differences.

You might be wondering, is this safe? It can be, with guardrails. Start with least privilege: give access only to the specific folders, inbox labels, or calendars needed. Turn on activity logs. Require your approval before any message is sent or any purchase is made.

Good guardrails to look for:

Clear permission prompts before first use.

A visible log of actions taken and data accessed.

Easy on/off switches and scoped access (this folder, not the whole drive).

Limits on spending and messages sent per day.
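
To show how these guardrails fit together, here is a minimal Python sketch. It does not connect to any real email or calendar service. The class name and the action names are invented for this guide. It simply models three ideas from the list: a short list of allowed actions, an approval step for risky ones, and a visible log.

```python
from dataclasses import dataclass, field

@dataclass
class GuardedAgent:
    allowed_actions: set[str]   # least privilege: anything not listed is blocked
    needs_approval: set[str]    # actions that wait for your explicit yes
    log: list[str] = field(default_factory=list)  # visible record of every request

    def request(self, action: str, detail: str, approved: bool = False) -> bool:
        """Return True only if the action is allowed and, where required, approved."""
        if action not in self.allowed_actions:
            self.log.append(f"BLOCKED {action}: {detail}")
            return False
        if action in self.needs_approval and not approved:
            self.log.append(f"WAITING FOR APPROVAL {action}: {detail}")
            return False
        self.log.append(f"DONE {action}: {detail}")
        return True

agent = GuardedAgent(
    allowed_actions={"label_email", "send_email"},
    needs_approval={"send_email"},
)

agent.request("label_email", "mark newsletter")                   # allowed, runs right away
agent.request("send_email", "reply to landlord")                  # waits for your approval
agent.request("send_email", "reply to landlord", approved=True)   # runs after you approve
agent.request("make_purchase", "book flight")                     # never granted, so blocked

for entry in agent.log:
    print(entry)
```

The log at the end is the part worth reading each day during your first week. If an entry surprises you, tighten the allowed list before expanding to the next task.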

A practical way to start. Pick one low‑risk task you are tired of doing. For example, filing receipts. Set the agent to move any email with “receipt” in the subject into a folder and extract the total into a spreadsheet. Run it for a week with daily checks. If it behaves well, expand to the next task.

It is normal to feel cautious. Agents are powerful. The goal is to save time without creating new risks. Keep private data out of scope when possible. Review the privacy policy. Ask what data is stored, for how long, and whether it is used to train the model.

The takeaway: An AI agent can take actions in your apps with your permission. Use clear approvals, narrow access, and action logs. Begin with one safe, well‑defined task and grow from there.

What is a “hallucination,” and how can you reduce it?

You ask for a quick summary of a product you love. The AI replies with a confident sentence that includes a feature that never existed. That confident wrong answer is called a hallucination.

Why does this happen? Most language models are prediction engines. They guess the next likely word based on patterns in their training data and your prompt. When the model lacks the right facts (or your question is vague) it can fill the gap with something that sounds right but is false.

You might be wondering, can I prevent this? You cannot remove the risk entirely, but you can reduce it.

Practical ways to cut hallucinations:

Provide the source. Paste the paragraph or link to the exact page and say, “quote only from this.”

Ask for citations. “List sources for each claim.” Then click through and check.

Narrow the task. Ask for steps, definitions, or comparisons rather than open‑ended essays.

Use retrieval. Point the tool at your PDFs or help pages and require it to answer from there.

Force a double check. “Before you answer, list what data you used and what you are unsure about.”

Compare versions. Ask for three short options or ask the tool to critique its own answer.

Red flags to watch for:

Precise numbers with no source.

Quotes that do not appear in any linked document.

Confident tone on brand new events or niche topics.

Perfectly formatted references that do not resolve to real pages.

A simple routine you can adopt:

For anything important, verify with an independent source you trust.

Keep sensitive actions (money transfers, medical changes) out of AI tools.

When speed matters, ask for a short list of key facts with links you can quickly scan.

It is normal to feel frustrated when a tool sounds sure but is wrong. Add guardrails that fit the task. For drafts and brainstorming, accept small errors and edit. For facts and decisions, demand sources and verify.

The takeaway: A hallucination is a confident but false answer. Reduce it by supplying sources, narrowing the task, using retrieval, and verifying anything important before you act.

The safety basics: privacy, security, and “responsible AI” terms to know

Picture a quick kitchen‑table check before using any new app. You glance at a few settings, flip two switches, and feel calmer because you know what is being saved and what is not. That is the mindset for safe, confident AI use.

Let’s translate the key terms into plain English and daily actions.

Data retention. This explains how long the app keeps your chats and files. Shorter is safer. Look for a setting that lets you turn off saving your conversations or set an auto‑delete period.

Model or training settings. Some tools use your chats to improve their systems. Others do not. Find the toggle that says “Use my data to train.” Turning it off usually keeps your content out of future training.

Export and delete. Good tools let you download your data and delete it. That way you can move on or wipe what you no longer need.

Audit logs. These are trails of who accessed what and when. In workplaces, logs help catch mistakes or misuse. At home, a simple activity history can be enough to review changes.

Access controls. This means who can see or change things. If the app offers roles (viewer, editor, admin), use the least access that still gets the job done.

Encryption. Think of this as a lock on your data while it is being sent and while it is stored. Most reputable apps use it by default. If the app handles sensitive info, confirm it.

Bias and fairness. AI can mirror patterns from its training data. That may lead to uneven results. When possible, read the vendor’s fairness statement and look for examples of testing across different groups.

Content authenticity. You may see terms like watermarking, provenance, or labels on AI‑made media. These aim to mark or trace how an image, audio clip, or video was created so that fakes are easier to spot.

You might be wondering, which settings should I check first? Here is a short starter list:

Turn off “use my data for training,” if offered.

Set chat history to auto‑delete after a period you are comfortable with.

Enable two‑factor authentication for your account login.

Review app permissions on your phone and remove anything unnecessary (camera, mic, location) when not in active use.

Fair question: what about family members and fraud calls? Create a shared “pause and verify” plan. If you get an urgent money request, hang up and call back using a saved number. Consider a simple “safe word” for families to defeat voice‑cloning scams.

It is normal to feel unsure about what is safe to paste into a chat. Use a simple rule: avoid IDs, passwords, full financial or medical records, or anything you would not email without protection. When in doubt, summarize or mask details (e.g., last four digits only).
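
If you often paste text into a chat, a small helper can do the masking for you. The Python sketch below is a rough illustration with a made-up function name and a made-up card number. It hides long runs of digits and keeps the last four. It is not a complete privacy filter. It will miss names and addresses, and it may mask other long numbers such as dates written with dashes, so still read the text before you paste it.

```python
import re

# Eight or more digits in a row, allowing single spaces or dashes between them.
LONG_NUMBER = re.compile(r"\d(?:[ -]?\d){7,}")

def mask_long_numbers(text: str, keep_last: int = 4) -> str:
    """Replace long digit runs with stars, keeping only the last few digits."""
    def mask(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())  # drop spaces and dashes
        return "*" * (len(digits) - keep_last) + digits[-keep_last:]
    return LONG_NUMBER.sub(mask, text)

note = "My card 4111 1111 1111 1234 was charged twice for order 58423."
print(mask_long_numbers(note))
```

The short order number is left alone because it has fewer than eight digits. The card number is reduced to stars plus its last four digits, which is usually enough for a support conversation.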

Two habits that make a big difference:

Update and patch. Keep your apps and devices current. Many security fixes arrive quietly in updates.

Review monthly. Put a 10‑minute reminder on your calendar to review settings, connected apps, and recent activity.

Ready to put this into practice? In the next section, we will focus on deepfakes, voice cloning, and the simple checks that help you and your family stay safe.

The takeaway: Learn the handful of settings that matter: data retention, training toggles, export/delete, two‑factor, and app permissions. Keep your data light, your accounts locked, and your family on the same verification page.

Deepfakes, voice cloning, and scams: how to protect yourself

It starts with a late evening call. A familiar voice says, “Mom, I lost my wallet. Can you send money now?” Your heart races. The story is urgent. The number is unknown. Could it be real? Or could it be a cloned voice?

Deepfakes are realistic but fake media. A face in a video may be swapped. A voice in a call may be synthesized. Scammers use these tools to trigger quick reactions. Fear. Urgency. Secrecy. The good news is you can slow the moment, run two quick checks, and stay safe.

First, pause and verify. Hang up and call back using a saved contact card. Send a message on a second channel to confirm. Ask a simple family “safe word” question that only the real person would know. Real emergencies survive a thirty‑second delay. Scams depend on speed.

Second, check the media. If it is an image or video, look for odd edges, smooth skin with no pores, earrings that change sides, or lighting that does not match the scene. Do a quick reverse image search to see if the photo already exists online. For audio, listen for robotic phrasing, clipped breaths, or a strange echo that does not match the room.

You might be wondering, what about emails and messages? The same rules help. Inspect the sender address closely. Hover over links before you click. When money or passwords are involved, switch to a known channel and confirm. If a message asks you to bypass normal steps, that is a red flag.
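For readers who like to see the logic spelled out, here is a small Python sketch of what “hover over links” is really checking: whether the link’s actual host belongs to the site you expect. It is an illustration under simple assumptions, not a full phishing detector. The function name link_matches_domain and the example addresses (mybank.example, phish.test) are made up for this sketch.

```python
from urllib.parse import urlparse


def link_matches_domain(url: str, expected_domain: str) -> bool:
    """Return True only if the link's host is the expected domain or a subdomain of it."""
    host = (urlparse(url).hostname or "").lower()
    expected = expected_domain.lower()
    # The host must end with the real domain, not merely contain it somewhere.
    return host == expected or host.endswith("." + expected)


# Hypothetical links for illustration only.
print(link_matches_domain("https://www.mybank.example/login", "mybank.example"))
print(link_matches_domain("https://mybank.example.account-verify.test/login", "mybank.example"))
print(link_matches_domain("https://mybank.example@phish.test/login", "mybank.example"))
```

The first link passes. The other two fail because the familiar name appears only at the front of the address, while the real destination is whatever comes last before the first single slash. That is the part to read when you hover.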

Practical steps that cut risk today:

Turn on account alerts for transfers, new payees, and logins.

Set spending limits and two‑factor authentication on bank and payment apps.

Save key numbers as contacts and use them for callbacks.

Create one family checklist for urgent requests and pin it in a shared chat.

Teach a simple script: “I will call you back on your saved number now.”

What about online shopping and social posts? Check the profile age, number of posts, and comment quality. Be cautious with deals that seem too good to be true. If a celebrity or brand seems to offer a giveaway, visit their official page in a separate tab and see if it is real.

It is normal to feel uneasy when technology can copy a face or voice. Focus on what you can control. Slow down. Verify on a second channel. Keep your device and apps updated so security patches protect you behind the scenes.

One last thought. Talk about scams when things are calm. Practice the callback routine with family. Add the safe word to a card and keep it simple. Small habits make the urgent moment easier.

The takeaway: Deepfakes and voice cloning try to rush you. Pause, verify on a saved number, use a family safe word, and turn on account alerts. Real emergencies survive a short delay. Scams do not.

Mini‑glossary cheat sheet (quick definitions with one‑line examples)

You do not need to memorize a textbook. Keep this page handy and skim it when a term pops up. Each entry gives you a plain definition and a quick example you can picture.

AI (Artificial Intelligence): Software that performs tasks that usually need human thinking. Example: your maps app rerouting around traffic.

Machine learning: A way for AI to learn patterns from data instead of hard rules. Example: your email app learning what you mark as spam.

Deep learning: Machine learning with many layers that spot complex patterns. Example: a phone camera recognizing a face in low light.

Neural network: A layered set of simple decision makers that work together. Example: recognizing a handwritten number on a package.

Generative AI: Tools that create new text, images, audio, or video from instructions. Example: drafting a polite refund email from your notes.

Large language model (LLM): A system trained on lots of text that predicts likely next words. Example: turning bullet points into a clear summary.

Multimodal: Works with more than one input or output type, like text and images. Example: taking a photo of a menu and getting a translation.

Prompt: The instruction you give an AI tool. Example: “Summarize this article in five bullets for a busy reader.”

System prompt: Background instructions that set the tool’s role and boundaries. Example: “You are a careful travel planner. Ask clarifying questions.”

Few‑shot example: A short sample that shows the style you want before the task. Example: paste one model email, then ask for a similar reply.

Token: A small chunk of text used to measure length. Example: very long pastes may hit the token limit and get trimmed.

Context window: How much the model can keep in mind at once. Example: in a long chat, early details can be forgotten.

Parameters: The internal settings the model learned during training. Example: you do not adjust them; you choose a model that fits the job.

Training data: The examples the model learned from. Example: articles and manuals that teach it general knowledge.

Fine‑tuning: Adapting a model to your style or domain with extra examples. Example: a clinic tuning answers to match patient‑friendly wording.

Retrieval: Letting the model look up your documents at answer time. Example: asking a tool to answer only from your company PDF.

RAG (retrieval‑augmented generation): Retrieval plus generation in one workflow. Example: search your files, then draft an answer with citations.

Embedding: A numeric fingerprint of meaning for text, images, or audio. Example: finding a note even when you forgot its title.

Vector database: Storage that finds nearby embeddings by meaning. Example: searching “cheap café in Old Town” and finding “budget coffee near the square.”

Agent: An AI that can take steps or use tools with permission. Example: adding a flight to your calendar after you approve the choice.

Tool use: When an AI calls a calculator, web search, or calendar to complete a task. Example: checking a date before drafting a reminder.

API: A controlled connector that lets apps talk to each other. Example: an agent using your calendar through an API, not by guessing your password.

Hallucination: A confident but false answer. Example: citing a feature that the product never had.

Bias: Skewed results that reflect patterns in training data. Example: a photo tool that works better on one skin tone than another.

Guardrails: Rules and limits that reduce risky outputs or actions. Example: approval required before any email is sent on your behalf.

Watermarking and provenance: Ways to label or trace AI‑made media. Example: a photo shows a label that it was created by software.
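For the curious, the Embedding and Vector database entries above can be pictured with a few lines of Python. This is a toy sketch: real tools compute embeddings with a trained model and use hundreds of numbers per item, while the three‑number “fingerprints” below are invented by hand purely to show how “nearby in meaning” is measured.

```python
import math


def cosine_similarity(a, b):
    """Score how closely two fingerprints point in the same direction (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up fingerprints for two saved notes (illustrative values only).
notes = {
    "budget coffee near the square": [0.9, 0.8, 0.1],
    "car insurance renewal": [0.1, 0.0, 0.9],
}

# Made-up fingerprint for the search "cheap café in Old Town".
query = [0.8, 0.9, 0.2]

# A vector database does this comparison at scale: find the closest fingerprint.
best = max(notes, key=lambda title: cosine_similarity(query, notes[title]))
print(best)  # prints: budget coffee near the square
```

The search and the matching note share no exact words. They match because their fingerprints are close, which is why this kind of search can find a note even when you forgot its title.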

The takeaway: Bookmark this page. When a new term appears, match it to the everyday example, then decide what action or safety check you need next.

To conclude...

If AI terms have felt like a foreign language, you now have the plain words and the simple habits that make them useful.

Start small. Use the Goal, Context, Format prompt for one weekly task, then add a safety check like turning off training on your data and enabling two‑factor login.

Build one family plan for urgent requests, then review app settings once a month. You stay in charge, the tools support your day, and your confidence grows with each small win.

So, your next step? Want a hand getting started? Download my free AI Quick-Start Bundle. It is a step‑by‑step resource designed for beginners who want to use AI wisely, without the overwhelm.

Frequently Asked Questions

Q1. What is the fastest way to get better results from AI tools?

Use the Goal, Context, Format prompt. Add a short example when you need a specific tone. Break big jobs into steps: outline first, draft second, edit last.

Q2. How do I keep my information private?

Check three settings first: training on my data, chat history retention, and two‑factor login. Turn training off if offered, set history to auto‑delete, and avoid pasting IDs, passwords, or full financial or medical records.

Q3. What should I do when the answer looks confident but feels off?

Ask for sources, then verify with a trusted page. For facts that matter, paste the source text and ask the tool to quote only from it.

Q4. Do I need a paid plan?

Free plans handle short drafts and summaries. Paid plans usually add a larger context window, faster replies, and better file handling. Start free and upgrade only if you hit limits often.

Q5. Can these tools replace my job?

They automate pieces of work, not full roles. Learn to direct them with clear prompts, keep your domain expertise sharp, and you stay valuable.

Q6. How can my family avoid voice cloning scams?

Agree on a “pause and verify” routine. Hang up and call back on a saved number. Use a simple safe word. Turn on account alerts for transfers and new payees.

Q7. What should I try first this week?

Pick one repeatable task, for example cleaning up notes after a call. Use a clear prompt, ask for five bullets, then save your favorite version as a template.

Sources:

Pew Research Center: How the U.S. Public and AI Experts View Artificial Intelligence (2025).

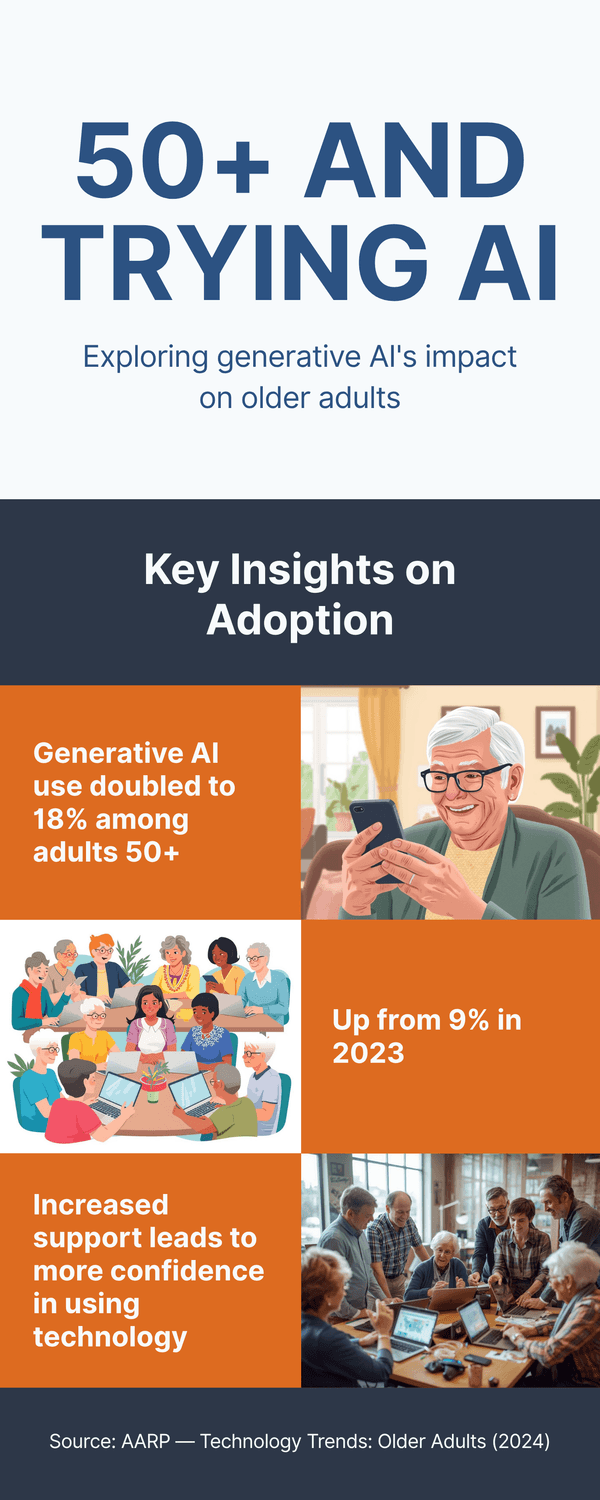

AARP Research: Tech Adoption and AI Concerns Among Adults 50+ (2024).

IBM: What Is AI Transparency? (2025).

U.S. GSA: Key AI Terminology (2025).

Cloudflare: What is a Large Language Model? (2025).

American Banker: Elder abuse, AI deepfakes and TikToks: 2024’s fraud trends (2024).

Gallup: AI Use at Work Has Nearly Doubled in Two Years (2025).