The Difference Between AI, Machine Learning, and Deep Learning: A Simple Guide for Adults 45+

By Rado

Have you ever felt like tech terms such as AI, ML, and DL blur together into a jumble of letters only engineers can decode? You’re not alone. Many adults 45+ say these terms sound intimidating and interchangeable. The good news is you don’t need to be a scientist to understand them. In this guide, we’ll break down the three concepts in plain language, give you everyday examples, and show you why they matter for your safety, independence, and peace of mind.

What exactly is Artificial Intelligence (AI)?

You sit down with your morning coffee and your phone already suggests the photos you might want to share, the headlines you usually read, and the route with fewer delays. None of that required you to press a special “AI” button. That quiet help behind the scenes is what most people mean by Artificial Intelligence.

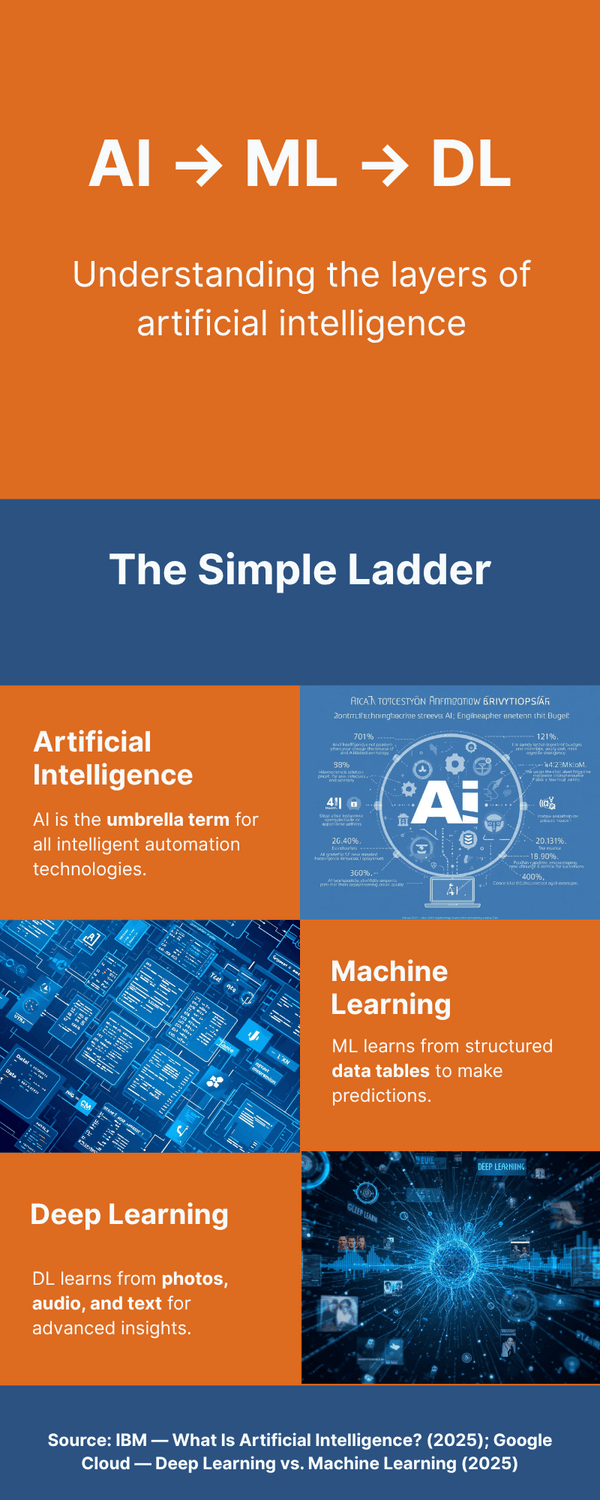

Plain definition: AI is any computer system that can perform tasks we usually associate with human thinking, like recognizing patterns, making decisions, solving problems, or generating language. It can be simple or sophisticated. A basic spam filter that follows clear rules counts as AI. So does a conversational assistant that can answer questions in plain English. The umbrella is wide. As IBM explains, AI covers everything from rule‑based programs to learning systems that improve with data (IBM, 2025).

Why this matters to you: When you know AI is the big umbrella, you can place everything else in context. Machine Learning sits inside AI. Deep Learning sits inside Machine Learning. That nesting helps you ask smarter questions. Is this tool using fixed rules or is it learning from my data? What kind of data does it need? Those questions lead to better choices about privacy, accuracy, and value for your time.

Where you already meet AI:

Recommendations that feel oddly on point, like what to watch next or which product fits your budget. Netflix‑style systems are classic AI in action and have been credited with major revenue lift thanks to personalization (Exploding Topics, 2025).

Assistants on your phone or in your home that answer quick questions, set reminders, and control lights.

Smartphone photos that group people and places, or auto‑enhance low‑light pictures.

Much of this runs quietly. In fact, a large share of everyday devices now include AI features, which is why it can feel invisible until something gets your attention (DemandSage, 2025).

Two helpful distinctions:

Rules vs. learning. Some AI follows hand‑written rules. Other AI learns patterns from data and improves over time. Both live under the AI umbrella.

Structured vs. unstructured data. Some tasks use neat tables of numbers. Others use messy data like photos, audio, or video. The more complex the data, the more likely advanced approaches are involved. Columbia Engineering offers a clear breakdown of how these families relate (Columbia University, 2025).
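
If you are curious what "rules vs. learning" looks like in practice, here is a minimal Python sketch. It is an illustration only, not how any real spam filter is built: the trigger words, the example messages, and the function names are all made up for this guide. The first filter uses words a person picked in advance. The second works out its own word list from labeled examples.

```python
from collections import Counter

# Hand-written rule: a person chose these trigger words in advance (made up).
RULE_WORDS = {"winner", "prize", "free"}

def rule_based_is_spam(message: str) -> bool:
    """Flag a message if it contains any hand-picked trigger word."""
    return any(word in RULE_WORDS for word in message.lower().split())

def learn_spam_words(examples: list[tuple[str, bool]]) -> set[str]:
    """Learn trigger words from labeled examples instead of hand-picking them.

    A word is kept if it shows up in more spam messages than normal ones.
    """
    spam_counts, normal_counts = Counter(), Counter()
    for text, is_spam in examples:
        target = spam_counts if is_spam else normal_counts
        target.update(set(text.lower().split()))  # count each word once per message
    return {word for word, n in spam_counts.items() if n > normal_counts[word]}

def learned_is_spam(message: str, learned_words: set[str]) -> bool:
    """Flag a message if it contains any word learned from the examples."""
    return any(word in learned_words for word in message.lower().split())

# Made-up labeled examples: (message, is_spam).
EXAMPLES = [
    ("claim your prize now", True),
    ("urgent wire transfer needed", True),
    ("urgent account verification", True),
    ("lunch on sunday", False),
    ("your photos from sunday", False),
]

learned = learn_spam_words(EXAMPLES)
new_message = "urgent please reply"
print(rule_based_is_spam(new_message))        # False: no hand-picked word matches
print(learned_is_spam(new_message, learned))  # True: "urgent" was learned from examples
```

Nobody told the second filter that "urgent" is suspicious. It picked that up from the examples, which is the basic idea behind learning systems.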

A few grounding questions to ask yourself:

What decision is this AI helping me make right now, and is that useful?

What data does it need, and do I feel comfortable sharing that?

If it gets something wrong, how easy is it to correct?

It’s normal to feel cautious. You might be wondering, “Do I need to understand the math?” You don’t. You only need the big picture so you can spot benefits, set boundaries, and get help when you want it.

The takeaway: AI is the big umbrella for computer systems that mimic parts of human thinking. Knowing that frame makes the next two sections easy: Machine Learning is how many systems learn from data, and Deep Learning is the advanced approach that handles complex, messy information.

How is Machine Learning (ML) different?

Imagine you’re checking your bank app after the weekend. A small alert pops up: “Was this purchase yours?” It’s not a random warning. Behind the scenes, a model has learned your normal spending pattern and spotted something that doesn’t fit. That learner is Machine Learning.

Plain definition: ML is a way of building systems that learn from data instead of following a long list of hand‑written rules. Give the model many examples, let it find patterns, then use those patterns to predict or classify new cases. In other words, we don’t program every step; we train the system on historical data so it can generalize. IBM offers a clear overview of this learn‑from‑data approach within the broader AI umbrella (IBM, 2025).

Where ML shines: ML does its best work with structured data—things like spreadsheets, transaction logs, sensor readings, and labeled tables. Because the inputs are tidy and well defined, the model can compare like with like and surface patterns we’d miss at a glance. Columbia Engineering summarizes this relationship neatly: AI is the big idea, ML is a major method inside it (Columbia University).

A practical example you already feel:

Fraud detection. Classic ML models analyze features such as amount, merchant type, time of day, location, and your past behavior. They learn what “normal” looks like for you and flag deviations in real time. Reviews of financial fraud detection show how models like gradient boosting, support vector machines, and classic neural nets reduce false alarms while catching more true fraud, especially when features are engineered by experts (MDPI, 2022; Appinventiv).
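
For readers who like to peek behind the curtain, here is a heavily simplified Python sketch of the "learn what normal looks like, then flag deviations" idea. It assumes a single feature (purchase amount) and a simple statistical cutoff. Real bank systems use many more features and far more capable models, and the amounts, the function names, and the threshold of 3 are all assumptions made for illustration.

```python
from statistics import mean, stdev

def learn_normal(amounts: list[float]) -> tuple[float, float]:
    """Summarize past spending as (average, typical spread)."""
    return mean(amounts), stdev(amounts)

def looks_unusual(amount: float, profile: tuple[float, float],
                  threshold: float = 3.0) -> bool:
    """Flag a purchase that sits far outside the learned spending range."""
    average, spread = profile
    return abs(amount - average) > threshold * spread

# Made-up purchase history for one customer.
PAST_PURCHASES = [42.0, 18.5, 63.0, 25.0, 51.5, 30.0, 47.0, 22.0]

profile = learn_normal(PAST_PURCHASES)
print(looks_unusual(55.0, profile))   # False: close to usual spending
print(looks_unusual(900.0, profile))  # True: far outside the usual range
```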

What’s happening under the hood? Two ingredients matter most:

Features. Humans decide which columns matter. For email spam, that could be word counts, sender domain, or subject patterns. For banking, it might be merchant category or distance from your usual locations.

Training and testing. The model learns on past data, then gets checked on new data to see if it really understands the pattern.
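
The two ingredients above can be sketched in a few lines of Python. This is a toy example under stated assumptions, not a description of any provider's system. The two features (purchase amount and distance from home in kilometers), the transactions, and the simple "nearest average" model are all invented for illustration. The point is the workflow: learn on one set of past cases, then check the result on cases the model has not seen.

```python
from statistics import mean

# Each row is (amount, km_from_home, is_fraud). All values are made up.
Row = tuple[float, float, bool]

TRAIN_ROWS: list[Row] = [
    (20, 2, False), (35, 5, False), (50, 1, False), (15, 3, False),
    (800, 400, True), (650, 900, True), (40, 8, False), (950, 300, True),
]
TEST_ROWS: list[Row] = [
    (30, 4, False), (700, 500, True), (60, 2, False), (45, 600, True),
]

def centroid(rows: list[Row]) -> tuple[float, float]:
    """Average amount and average distance for a group of transactions."""
    return mean(r[0] for r in rows), mean(r[1] for r in rows)

def train(rows: list[Row]) -> dict[str, tuple[float, float]]:
    """Learn one 'typical' point for fraud and one for normal purchases."""
    fraud = [r for r in rows if r[2]]
    normal = [r for r in rows if not r[2]]
    return {"fraud": centroid(fraud), "normal": centroid(normal)}

def predict(model: dict[str, tuple[float, float]], amount: float, km: float) -> bool:
    """Predict fraud if the purchase is closer to the typical fraud point."""
    def sq_dist(center: tuple[float, float]) -> float:
        return (amount - center[0]) ** 2 + (km - center[1]) ** 2
    return sq_dist(model["fraud"]) < sq_dist(model["normal"])

def accuracy(model: dict[str, tuple[float, float]], rows: list[Row]) -> float:
    """Share of rows where the prediction matches the known label."""
    correct = sum(predict(model, a, km) == label for a, km, label in rows)
    return correct / len(rows)

model = train(TRAIN_ROWS)
print(accuracy(model, TEST_ROWS))  # 0.75: one unseen case is misjudged
```

The model misses the small purchase made far from home. That is exactly what the testing step is for: it shows where the learned pattern falls short before anyone relies on it.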

You might be wondering, Do I need to tune any of this myself? Usually, no. Your bank, email provider, or favorite app handles the setup. How do I stay in control? Ask simple, direct questions: What data is used? Can I review flagged items? Can I opt out? Those are fair questions, and reputable providers should answer them.

Why it matters to you: ML improves everyday tools without asking you to change your routine. It trims inbox spam, blocks sketchy logins, and tags photos faster. It can also save time at work by sorting tickets, predicting delays, or recommending the next best action. McKinsey reports that organizations are now applying AI methods like ML across multiple functions as adoption surges (McKinsey, 2024).

But what about messy data like photos, sound, or video, where features aren’t obvious? That’s where Deep Learning steps in.

The takeaway: ML sits inside AI and learns from data to make useful predictions. Classic ML works best on structured data, where humans still shape the features and the guardrails. When data gets too complex for hand‑picked features, you’ll meet Deep Learning next.

What makes Deep Learning (DL) unique?

Think about your photo library. Your phone can spot a familiar face across years of pictures, even if the person is wearing glasses or standing in bad light. That kind of pattern recognition is a hallmark of Deep Learning.

Plain definition: Deep Learning is a specialized part of machine learning that uses multi‑layer neural networks to learn directly from raw, messy data like images, audio, or video. Instead of humans hand‑picking features, the network discovers the right clues on its own as it moves through many layers of abstraction.

Google Cloud offers a clear comparison: classic ML relies more on feature engineering, while DL automatically extracts features from complex data (Google Cloud). NVIDIA explains this leap was powered by large datasets and modern processors that made training deep networks practical (NVIDIA).

Why this matters: Photos, voices, and movement data don’t fit neatly in a spreadsheet. DL excels when real life is messy. It powers the apps you feel every day: photo categorization, voice dictation, real‑time language translation, and the smarter parts of virtual assistants. It also underpins many generative tools that can summarize text, draft messages, or help you brainstorm.

A health and independence example:

Fall detection and prevention. Cameras and wearables stream continuous signals about posture and gait. A deep network can sift through those raw pixels and motion patterns to flag a rising risk before a fall happens. That is proactive help, not just an alert after the event. Research on aging and AI highlights how such systems support independence and peace of mind for older adults and caregivers (Oxford Academic, 2025).

What’s different under the hood?

Automatic feature learning. Instead of engineers specifying “look for edges” or “measure stride length,” deep networks learn those signals internally as they pass data through many layers (Google Cloud).

Scale improves results. With more data and training time, DL models often keep getting better, especially on images, speech, and language tasks (NVIDIA).

Works with unstructured data. Photos, audio, free‑text, and sensor streams are DL’s home turf.
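
To make "passing data through layers" a little more concrete, here is a tiny Python sketch of data flowing through a two-layer network. Treat it as a picture of the mechanics only. The weights below are hand-set and hypothetical so the example is easy to follow; in real Deep Learning the network finds its own weights from large amounts of data, and it has far more layers and inputs.

```python
def relu(values: list[float]) -> list[float]:
    """Keep positive signals, zero out negative ones."""
    return [max(0.0, v) for v in values]

def layer(inputs: list[float], weights: list[list[float]],
          biases: list[float]) -> list[float]:
    """One layer: each output is a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

# Hypothetical hand-set weights (a real network would learn these from data).
# Layer 1: each unit responds to a drop in brightness between neighboring pixels.
W1 = [[1.0, -1.0, 0.0],
      [0.0, 1.0, -1.0]]
B1 = [0.0, 0.0]
# Layer 2: combines the two units into a single "edge score".
W2 = [[1.0, 1.0]]
B2 = [0.0]

def forward(pixels: list[float]) -> float:
    """Pass three pixel brightness values through both layers."""
    hidden = relu(layer(pixels, W1, B1))
    return layer(hidden, W2, B2)[0]

print(forward([1.0, 1.0, 1.0]))  # 0.0: flat brightness, no edge
print(forward([1.0, 0.0, 0.0]))  # 1.0: bright-to-dark edge detected
```

The first layer picks out a simple clue (a change in brightness) and the second layer combines clues into a decision. Stacking many such layers is what puts the "deep" in Deep Learning.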

You might be wondering, Do I need to set up any of this? No. The value shows up inside services you already use: clearer photos, more accurate captions, smoother voice commands. Is it a black box? It can feel that way, which is why many providers invest in explainable methods and practical controls you can see and adjust.

When does DL matter to you?

If you rely on your camera to sort people and places.

If you use voice to dictate messages or search hands‑free.

If you or a loved one benefit from health or home‑safety monitoring that quietly watches for problems and alerts early (Oxford Academic, 2025).

Two quick questions to stay in control: What data types does this feature use (images, audio, movement)? Can I review, delete, or opt out of data collection? Those are fair, simple checks that keep you in the driver’s seat.

The takeaway: Deep Learning is the engine for complex, unstructured data. It learns features automatically and improves with scale, which is why it powers photo recognition, speech, and many health‑safety tools. You get the benefits in everyday apps, while good settings and privacy controls help you keep it comfortable.

Why should you care about the differences?

Let’s bring this down to the kitchen table. You’re choosing a new app, a smartwatch feature, or a bank setting. You see “AI‑powered” everywhere, but that label alone doesn’t tell you how it works or what it needs from you. Knowing the difference between AI, ML, and DL helps you ask better questions and protect your time, money, and privacy.

Start with the big picture. AI is the umbrella idea: software that mimics parts of human thinking, such as recognizing patterns, making decisions, and generating language (IBM, 2025). Inside that umbrella, Machine Learning is how many systems learn from structured data such as tables and logs, and Deep Learning is the more advanced approach that handles images, audio, and text by learning directly from raw data (Google Cloud, 2025; Columbia Engineering, 2025).

What changes when you understand this? You stop guessing. For example, if a bank offers smarter fraud alerts, you can assume ML is analyzing structured transaction patterns and ask: What features are used? Can I review and correct false alerts? Reviews show ML models catch subtle risks while reducing false alarms when features are chosen carefully (MDPI, 2022; Appinventiv, n.d.).

If a health app promises early fall‑risk detection from camera or wearable data, that’s DL on unstructured signals. Now you can ask: What raw data is captured? Can I delete it? Is processing done on my device or in the cloud? Research on aging and independence highlights how DL‑enabled monitoring can support safer, more independent living (Oxford Academic, 2025).

Confidence grows with experience. You might be wondering, Do I have to be tech‑savvy to benefit? No. Studies show trust rises sharply after hands‑on use, especially among adults 50+ (National Poll on Healthy Aging, 2025). At work, organizations are rolling out AI across multiple functions, which means the tools you touch are getting smarter, and clearer about settings, over time (McKinsey, 2024).

Practical ways this helps you today:

Money: Turn on ML‑based fraud and login alerts in your banking and email settings. Review flagged items regularly. It takes minutes and can save a headache (MDPI, 2022).

Photos and voice: If you value convenience, leave DL features on for better auto‑albums and dictation. If privacy matters more, check what’s stored and disable cloud backups you don’t need (Google Cloud, 2025).

Health and home: For fall detection or smart cameras, look for clear privacy controls, local processing options, and the ability to review or delete recordings (Oxford Academic, 2025).

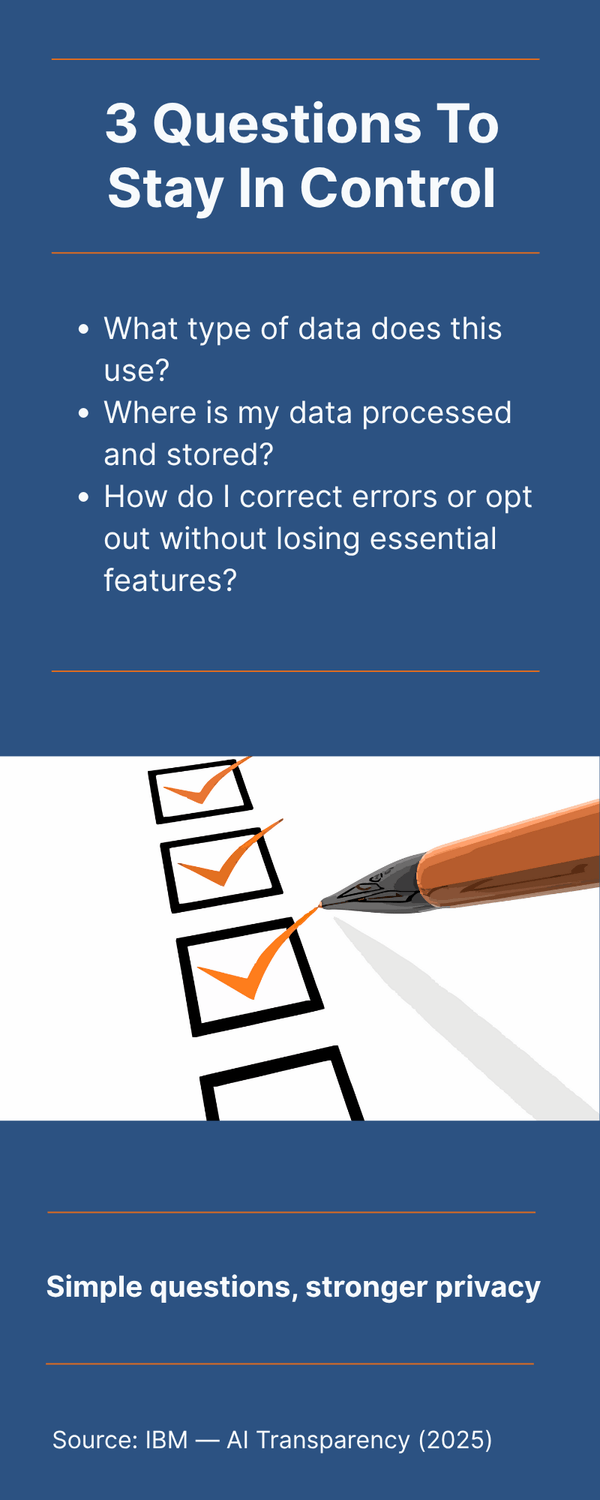

Three quick questions to keep handy:

What type of data does this use—neat tables or raw images/audio? (ML vs. DL.)

Where is my data processed and stored—on device or cloud?

How do I correct errors or opt out without losing essential features?

It’s normal to feel cautious around new tech. That’s a fair instinct. But clarity turns caution into control. When you can name what’s happening—AI at the top, ML for structured patterns, DL for complex signals—you choose settings with confidence and use the help that truly helps.

The takeaway: Understanding the AI‑ML‑DL ladder lets you ask sharper questions, choose sensible privacy settings, and focus on benefits you care about (security, convenience, and independence).

Common misconceptions: Are AI, ML, and DL just buzzwords?

Picture a family chat at Sunday lunch. Someone says, “It’s all just AI,” while another insists, “No, it’s machine learning.” Who’s right? Both are touching the elephant from different sides. Let’s clear up the most common mix‑ups so you can speak about this with calm confidence.

Myth 1: “AI, ML, and DL are the same thing.”

They’re related, not identical. Think of AI as the big idea: systems that mimic parts of human thinking (reasoning, decision‑making, pattern recognition) (IBM, 2025). Machine Learning (ML) lives inside AI and learns patterns from data rather than following a long list of rules (Columbia Engineering). Deep Learning (DL) is a specialized form of ML that uses multi‑layer neural networks to learn directly from raw images, audio, and text; no hand‑picked features required (Google Cloud).

Myth 2: “Deep Learning is always better.”

Not necessarily. DL shines with messy, unstructured data (photos, video, speech). But for clean, tabular data (think spreadsheets or transaction logs), classic ML is often simpler, cheaper, and easier to interpret. Reviews in finance show that well‑engineered ML features can catch fraud effectively while keeping false alarms down (MDPI, 2022). So, ask: Is my data neat tables or raw media? Pick the right tool for the job.

Myth 3: “If it’s AI, it must be a robot taking jobs.”

Most systems today are narrow AI—they do one task well, like flagging spam or transcribing audio. Leaders in the field consistently frame AI as augmentation, not replacement: it handles heavy pattern‑finding so people can focus on judgment, empathy, and creativity (Stanford GSB / Andrew Ng). You might be wondering, Will this make my work irrelevant? A more practical question is: Which parts of my work are repetitive and could be sped up, so I can spend more time on the parts only I can do?

Myth 4: “It’s a black box, so it can’t be trusted.”

It can feel opaque. That’s why responsible providers invest in transparency and explainability: plain‑English summaries, model cards, and controls you can actually use. If a feature affects important decisions (money, health, safety), look for clear disclosures and options to review or correct outputs. Guidance on transparency is becoming standard practice across the industry (IBM, AI Transparency).

Myth 5: “More data is always better.”

Sometimes less is smarter. Good systems aim for data minimization: collect what’s necessary, not everything. Your role? Choose settings that match your comfort. Do you want on‑device processing when available? Auto‑delete after 30 days? Those are sensible, privacy‑first defaults.

A quick micro‑checklist for conversations and decisions:

Which bucket are we in? (AI umbrella, ML with structured data, or DL with images/audio/text.)

What data types are used and where are they processed? (Device or cloud.)

How do I review, correct, or opt out? (Easy controls inspire trust.)

It’s normal to feel cautious, especially if past tech changes felt rushed. That’s a fair concern. The antidote is clarity: name the bucket, know the data, and insist on controls. With that, these terms stop sounding like buzzwords and start working for you.

The takeaway: AI is the umbrella, ML is the learn‑from‑tables workhorse, and DL is the power tool for messy media. Use the right label, ask the three control questions, and you’ll steer the conversation and your settings with confidence.

To conclude...

You don’t need a computer science degree to understand the trio. Think of AI as the umbrella, Machine Learning as the learn‑from‑data engine, and Deep Learning as the power tool for photos, audio, and text (IBM, 2025; Google Cloud, 2025). When you can name which one you’re using, you ask better questions about data, accuracy, and control.

That clarity helps you choose settings that protect your money, your privacy, and your time. And the more you try these tools in low‑risk ways, the more your confidence grows, especially if you’re 45+ and value practical wins over tech jargon (National Poll on Healthy Aging, 2025; Pew Research Center, 2025).

So, what’s your next step? If you want a hand getting started, download my free AI Quick-Start Bundle. It is a step‑by‑step resource designed for beginners who want to use AI wisely, without the overwhelm.

Frequently Asked Questions

1) Do I need to be tech‑savvy to benefit from AI, ML, and DL?

No. Start with the big picture and use tools that clearly explain what they do. Confidence grows with hands‑on use, and older adults who try AI report higher trust than those who have never used it (National Poll on Healthy Aging, 2025).

2) Which one am I using in daily life?

Often all three. Your bank’s fraud alerts usually rely on ML that analyzes transaction patterns, while your phone’s photo features and voice dictation often rely on DL. All of this sits under the AI umbrella (IBM, 2025; MDPI, 2022; Google Cloud, 2025).

3) Is Deep Learning a black box I shouldn’t trust?

It can feel opaque, which is why responsible providers invest in transparency and clear settings you can review. Look for plain‑language explanations, data controls, and opt‑out options (IBM, AI Transparency, 2025).

4) How do I keep control of my data when using AI features?

Ask three simple questions: What types of data are used? Where is processing done (device or cloud)? How can I review, correct, or delete data? Choose on‑device processing and auto‑delete options when available. This keeps benefits high and exposure low (Google Cloud, 2025).

5) Will AI replace my job?

Most tools today are narrow assistants that automate repetitive tasks so people can focus on judgment, empathy, and creativity. Leaders describe AI as augmentation, not replacement (Stanford GSB, n.d.).

Sources:

IBM — What Is Artificial Intelligence? (2025).

IBM — AI vs. Machine Learning vs. Deep Learning vs. Neural Networks (2025).

Google Cloud — Deep Learning vs. Machine Learning (2025).

MDPI — Financial Fraud Detection Based on Machine Learning: A Systematic Literature Review (2022).

Oxford Academic (The Journals of Gerontology: Series A) — Aging With Artificial Intelligence: How Technology Enhances Older Adults' Health and Independence (2025).

National Poll on Healthy Aging — How Older Adults Use and Think About AI (2025).

Pew Research Center — How Americans View AI and Its Impact on People and Society (2025).

McKinsey — The State of AI in Early 2024: Gen AI Adoption Spikes and Starts to Generate Value (2024).

Stanford Graduate School of Business — Andrew Ng: Why AI Is the New Electricity (n.d.).

Appinventiv — An Analysis on Financial Fraud Detection Using Machine Learning (n.d.).