How AI Works in Plain Language (For Adults 45+)

By Rado

AI talk can feel like alphabet soup. You see headlines, then a chatbot pops up when you just want a person. Here’s the short version: AI is pattern-spotting software that predicts likely answers. In this guide, you’ll learn the simple idea behind it, where it shows up in daily life, and how to use it with control and privacy in mind.

1) What is AI in plain language?

Imagine you’re sorting a shoebox of mixed photos: holidays, grandkids, garden projects. After a while, your brain spots patterns: “beach photos,” “birthday cakes,” “the red jacket.” You start predicting where each new photo belongs. That’s the simplest way to think about AI. It’s software that spots patterns in lots of examples and then makes a quick best guess.

Put simply, AI is like smart autocomplete. It doesn’t “think” like a person. It predicts what’s likely next based on what it has seen before. When your email suggests a reply, when your bank flags a weird transaction, or when your phone groups faces in the Photos app, that’s AI using patterns to help.

You might be wondering, “So is it intelligent or just good at guessing?” Fair question. AI is powerful pattern‑matching with rules learned from data, not common sense or life experience. It can write a draft, recognize a face, or spot a duplicate charge, but it doesn’t understand birthdays, grief, or why that charge matters to you. That’s your role.

So how does it work day to day? Think of three steps:

Learn from examples. Feed the system many labeled examples (spam vs. not spam). It studies the differences.

Create a recipe. From those examples, it builds a compact “recipe” (a model) that captures the telling patterns.

Make predictions. With a new email, it applies the recipe and decides: likely spam or not.
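For readers curious about what happens under the hood, here is a toy sketch of those three steps in Python. It rests on big simplifying assumptions: the four example emails are made up, and the “recipe” is just one score per word (times seen in spam minus times seen in normal mail). Real spam filters learn from vastly more mail and many more clues.

```python
from collections import Counter

def learn(examples):
    """Step 1, learn from examples: count words in spam vs. normal mail."""
    spam_words, normal_words = Counter(), Counter()
    for text, is_spam in examples:
        words = text.lower().split()
        if is_spam:
            spam_words.update(words)
        else:
            normal_words.update(words)
    return spam_words, normal_words

def build_recipe(spam_words, normal_words):
    """Step 2, create a recipe: one score per word.
    Positive means the word showed up more in spam, negative more in normal mail."""
    vocabulary = set(spam_words) | set(normal_words)
    return {word: spam_words[word] - normal_words[word] for word in vocabulary}

def predict(recipe, text):
    """Step 3, make a prediction: add up the scores of the words in a new email.
    Words the recipe has never seen count as 0."""
    score = sum(recipe.get(word, 0) for word in text.lower().split())
    return "likely spam" if score > 0 else "likely not spam"

# Hypothetical labeled examples: (email text, is it spam?)
training_examples = [
    ("win a free prize now", True),
    ("claim your free gift today", True),
    ("lunch with the grandkids on sunday", False),
    ("your garden club meets today", False),
]

recipe = build_recipe(*learn(training_examples))
print(predict(recipe, "free prize inside"))           # likely spam
print(predict(recipe, "see you at the garden club"))  # likely not spam
```

The recipe only knows words it has seen, so a spammer who avoids “free” and “prize” slips straight through. That is one reason your corrections help.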

Now, why does it sometimes get things wrong? Because predictions aren’t truth. If your habits change, or the examples were limited or biased, the guesses can slip. That’s normal, and it’s why a human review (yours) still matters. It’s also why good tools let you correct them: your feedback helps future predictions.

You might ask, “Do I have to be technical to benefit?” Not at all. Start with familiar helpers you already use: spam filters, photo search (try typing “dogs” or “sunset”), or voice assistants for simple reminders. Each one is a pocket‑sized example of pattern‑spotting doing useful work.

It’s normal to feel cautious. You stay in charge by setting limits: choose reputable apps, adjust privacy settings, and double‑check important results. Use AI as the diligent assistant that drafts, sorts, and suggests while you decide.

Mini tip: When you try an AI tool, tell it the goal and give one clear example of what good looks like. You’ll be surprised how much that improves the outcome.

The takeaway: AI = pattern‑spotting software that makes educated guesses. It’s great at helping; you keep the judgment and the off switch.

2) How does AI “learn” without being human?

Picture a Saturday at the kitchen table. You’re sorting a messy stack of receipts into piles: groceries, gas, travel. After a few minutes, your brain speeds up. You notice patterns. You stop reading every word and start glancing for clues, like the store name or a total that “looks right.” That is very close to how AI learns from data: it studies lots of examples, then gets good at spotting patterns and making fast, reasonable guesses.

Modern AI is mostly machine learning. We give software thousands or millions of examples and it tunes its internal settings to score well on a task, like “is this email spam?” or “what word likely comes next?” In training, the system guesses, gets told how far off it was, then adjusts and tries again.

Repeat this cycle over and over, and the guesses improve. Google explains this idea clearly in its overview of artificial intelligence, which defines AI as systems that analyze data and make predictions based on learned patterns (Google Cloud, n.d.). IBM describes the relationship this way: AI is the big tent, machine learning is a subset, and deep learning is a further subset that handles complex data like images and audio (IBM, n.d.).

You might be wondering, “What’s inside the box that actually changes?” Think of millions or even billions of tiny dials called parameters. During training, the system turns those dials a little at a time to reduce mistakes. With language models, that tuning teaches the model to predict the next likely word in a sentence, which is why they feel like very smart autocomplete. Google Developers gives a friendly primer on these large language models and how they learn from huge text collections (Google Developers, n.d.). Another way to picture it is a giant library squeezed into one compact file of patterns (AltexSoft, n.d.). The model does not look up pages in that file; it draws on the patterns to make quick, context-aware guesses.
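To make the “dials” idea concrete, here is a minimal sketch with a single dial, using a standard method called gradient descent. The examples are invented and follow a hidden rule (the answer is three times the input) that the code never states; the loop discovers it by guessing, measuring the error, and nudging. Real models repeat this same loop across millions or billions of dials with more elaborate math.

```python
# Hypothetical examples: (input, correct answer).
# The hidden pattern is "answer = 3 x input", but the model is never told that.
examples = [(1, 3), (2, 6), (3, 9), (4, 12)]

weight = 0.0          # the single dial, starting from a blank guess
learning_rate = 0.01  # how far to turn the dial on each correction

for _ in range(200):                         # go over the examples many times
    for x, correct in examples:
        guess = weight * x                   # 1. make a guess
        error = guess - correct              # 2. measure how far off it was
        weight -= learning_rate * error * x  # 3. turn the dial a little to shrink the error

print(round(weight, 3))  # 3.0: the dial has settled on the hidden pattern
```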

Does more data always mean better results? Often yes, up to a point. More varied examples help the model see edge cases and reduce weird mistakes. But there are limits. If the examples are one‑sided or outdated, the model will pick up those blind spots. That is why it helps when a tool’s maker explains what the model was trained on, how it was tested, and where it tends to miss. It also explains why your judgment still matters. You decide when to accept a suggestion and when to ask for a source.

It’s normal to ask, “Do I have to understand the math?” No. You just need the big picture. Think examples in, guesses out, with steady feedback in the middle. And remember the rule of thumb: prediction is not the same as truth. When the stakes are high, verify numbers, ask for citations, and keep a human in the loop.

The takeaway: AI “learns” by tuning lots of small dials after seeing many examples. It predicts what fits the pattern, which is useful, but your judgment and verification stay in charge.

3) What’s a Large Language Model, really?

You open your laptop to write a tricky email. You type the first few words and your email app suggests the next phrase. Now scale that up. A Large Language Model (LLM) is like a supercharged autocomplete trained on a huge library of text so it can predict likely words and sentences with surprising fluency.

Here is the simple idea. An LLM breaks text into small pieces called tokens. During training, it reads billions of tokens and learns to predict the next one based on the ones before it. Inside the model are many tiny adjustable settings called parameters. Training adjusts those settings so the model gets better at guessing what comes next. When you ask a question later, the model uses that trained pattern memory to generate a response. Think patterns in, likely words out.
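Here is a toy version of “predict the next piece” in Python. It makes two big simplifying assumptions: it splits text on spaces instead of using real sub-word tokens, and it simply counts which word follows which (a so-called bigram model) instead of training a neural network. The sample text is made up. Still, the shape is the same: learn patterns from text, then generate one likely word at a time.

```python
from collections import Counter, defaultdict

training_text = (
    "thank you for your email . thank you for your help . "
    "thank you for the flowers . see you soon ."
)

# "Training": count which word follows which.
follow_counts = defaultdict(Counter)
tokens = training_text.split()
for current, following in zip(tokens, tokens[1:]):
    follow_counts[current][following] += 1

def next_word(word):
    """Return the word most often seen after `word`; ties go to the one seen first."""
    candidates = follow_counts.get(word)
    if not candidates:
        return None  # never seen this word, so there is no pattern to draw on
    return candidates.most_common(1)[0][0]

# Generate a short continuation, one predicted word at a time.
word, sentence = "thank", ["thank"]
for _ in range(4):
    word = next_word(word)
    if word is None:
        break
    sentence.append(word)
print(" ".join(sentence))  # thank you for your email
```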

What makes LLMs feel helpful? They are excellent at language tasks. They can summarize long notes, clean up grammar, shift tone, draft emails, outline plans, and brainstorm options. They can also translate, extract key points, and write simple code. You might be wondering, “Does it understand the world or only the words?” That is a fair question. LLMs model language patterns. They do not have life experience or common sense in the human sense. This is why they sometimes sound confident while being wrong. Your judgment is still the safety net.

Some tools also let you adjust a few settings that shape results, no math required. The temperature setting controls how adventurous the wording is: lower values keep the reply more predictable, while higher values make it more creative. The context window is how much text the model can consider at once. Give it the right background, examples, and constraints, and quality jumps. Clear inputs lead to clear outputs.
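If you are curious how temperature works, a common approach is to divide the model’s raw scores by the temperature before turning them into probabilities (a step called softmax). The sketch below assumes made-up scores for three possible next words after “See you”; real models score tens of thousands of tokens at once.

```python
import math

def chances(scores, temperature):
    """Softmax with temperature: divide scores by temperature, then turn them into probabilities."""
    scaled = {word: score / temperature for word, score in scores.items()}
    biggest = max(scaled.values())  # subtracting the max avoids overflow; result unchanged
    weights = {word: math.exp(value - biggest) for word, value in scaled.items()}
    total = sum(weights.values())
    return {word: w / total for word, w in weights.items()}

# Hypothetical raw scores for the word after "See you".
raw_scores = {"soon": 2.0, "tomorrow": 1.0, "later": 0.5}

for t in (0.5, 1.0, 2.0):
    rounded = {w: round(p, 2) for w, p in chances(raw_scores, t).items()}
    print(f"temperature {t}: {rounded}")
```

With these numbers, “soon” gets about 84% of the chance at temperature 0.5, about 63% at 1.0, and about 48% at 2.0. Lower temperature concentrates the odds on the top choice; higher temperature spreads them out, which reads as more varied wording.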

So, what are the limits? First, prediction is not the same as fact. If the training data was thin or biased on a topic, the answers can drift or invent details. Second, models do not automatically know current events unless connected to a tool that can browse or fetch trusted data. Third, long tasks may require step-by-step prompts so the model does not lose track.

How do you use this in daily life without getting lost in settings? Start with concrete tasks. Ask an LLM to shorten a draft email to three sentences. Paste a meeting transcript and ask for a bullet summary with action items. Give a recipe and ask for a shopping list for two people. Small, clear jobs build confidence fast.

It is normal to feel cautious. Begin with low-stakes tasks, always review the output, and add a quick source check when facts matter. Treat the model as a fast first draft, not a final decision maker.

Mini tip: When you want a specific style, paste a short example and say, “Match this tone.” The model will mirror your sample much more closely.

The takeaway: An LLM is pattern-trained autocomplete for language. It predicts likely words, which makes it great for writing and summarizing, while you supply facts, context, and final judgment.

4) How does AI go beyond text to actually “do things”?

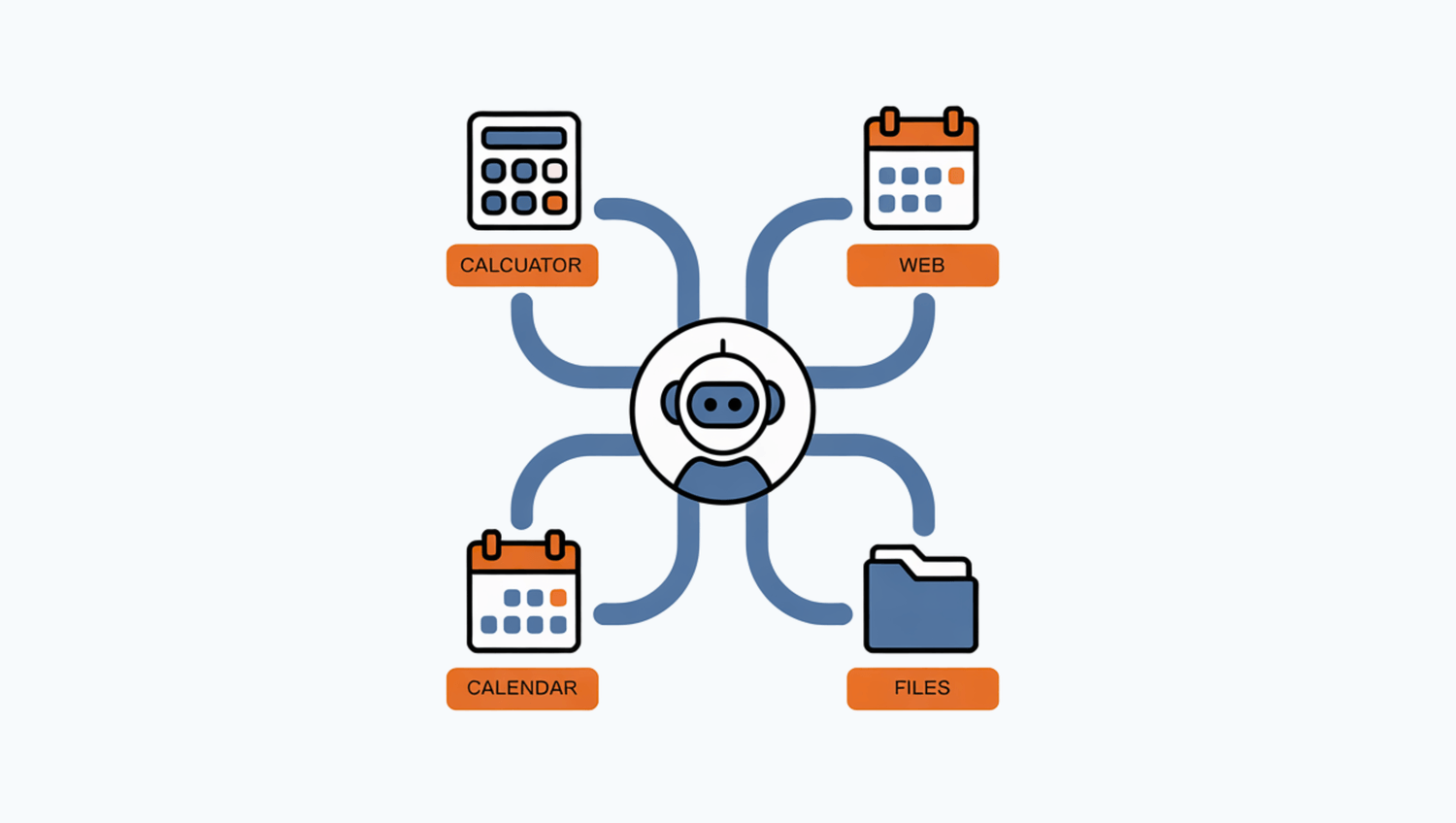

Imagine asking a smart assistant, “What is 17% of 482, and can you put the result into my budget sheet?” A plain language model might guess at the math. A well‑set‑up assistant will call a calculator, get the exact number, then send it to your spreadsheet tool. In short, the model writes words, but it can also call tools that take action.

Think of the AI as a helpful switchboard. It listens to your request, figures out which tools are needed, and routes the job. The writing happens in one place. The doing happens in connected apps. Why does this matter? Because tools reduce guesswork. A calculator removes math errors. A web fetch grabs the current store hours. A calendar tool adds the appointment at the right time.
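Here is a minimal Python sketch of the switchboard idea, using the budget example above. The tool functions and the keyword-based routing are hypothetical stand-ins: in real assistants, the language model itself decides which tool to call and with what inputs. The key point holds either way: the number comes from exact arithmetic, not from a guess.

```python
import re
from decimal import Decimal

# Finds requests like "17% of 482" (whole or decimal numbers).
PERCENT_REQUEST = re.compile(r"(\d+(?:\.\d+)?)% of (\d+(?:\.\d+)?)")

def calculator_percent(percent, amount):
    """Hypothetical calculator tool: exact percentage using decimal arithmetic."""
    return Decimal(percent) * Decimal(amount) / 100

def add_row(sheet, label, value):
    """Hypothetical spreadsheet tool: append one row to a budget sheet."""
    sheet.append((label, value))

def route(request, sheet):
    """Switchboard: decide which tools the request needs, run them, log each action."""
    actions = []
    match = PERCENT_REQUEST.search(request)
    if match is None:
        actions.append("no tool needed: answer in words")
        return actions
    label = match.group(0)
    result = calculator_percent(match.group(1), match.group(2))
    actions.append(f"calculator: {label} = {result}")
    if "budget sheet" in request:
        add_row(sheet, label, result)
        actions.append(f"spreadsheet: added row '{label}'")
    return actions

budget_sheet = []
request = "What is 17% of 482, and can you put the result into my budget sheet?"
for action in route(request, budget_sheet):
    print(action)
# calculator: 17% of 482 = 81.94
# spreadsheet: added row '17% of 482'
```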

Here are common tool types you might see:

Calculator tools. Great for taxes, tips, unit conversions, and simple budgets. You get precise numbers, not estimates.

Web lookup tools. Useful for facts that change, like opening hours or this year’s tax brackets. You can also ask for the source so you can check it.

File tools. These read, summarize, or extract information from PDFs, spreadsheets, or slides. Handy for long statements or lecture notes.

Productivity tools. Add tasks, draft calendar events, generate email text you can paste, or create a table you can copy into Excel or Google Sheets.

Code tools. For power users, these can shape data, make quick charts, or transform a messy CSV into something clean.

You might be wondering, “Isn’t that risky? Can it click around my computer without me?” That is a fair concern. Good assistants ask permission before using outside tools and show you what they did. You stay in control by reading the preview, checking the numbers, and only pasting results you trust.

What does this look like in daily life? Try these simple starters:

• “Calculate the mileage cost for 246 kilometers at $0.39 per km, then format a two‑column table I can paste into my expense sheet.”

• “Find the latest opening hours for the passport office in Bratislava and list the link you used.”

• “Summarize this 9‑page PDF into five bullets and surface any dates or amounts I should calendar.”

• “Turn these weekend plans into a calendar schedule with times and a packing checklist.”
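As a check on the first starter prompt, here is the arithmetic and table it asks for, sketched in Python: 246 km × $0.39 = $95.94. The tab-separated layout is an assumption, chosen because tab-separated text pastes into separate columns in Excel or Google Sheets.

```python
from decimal import Decimal

distance_km = Decimal("246")
rate_per_km = Decimal("0.39")
mileage_cost = distance_km * rate_per_km  # exact decimal math, no rounding drift

# Two columns: a label and a value, separated by a tab.
rows = [
    ("Item", "Value"),
    ("Distance (km)", str(distance_km)),
    ("Rate per km ($)", str(rate_per_km)),
    ("Mileage cost ($)", f"{mileage_cost:.2f}"),
]
table = "\n".join("\t".join(row) for row in rows)
print(table)
```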

It is normal to feel cautious with anything that touches your data. Start small, use non‑sensitive examples, and watch how the assistant handles sources and calculations. As your confidence grows, you can connect more tools, while keeping your privacy settings tight.

Mini tip: When you want action, include verbs and outputs. Say, “Calculate, then format as a table,” or “Look up, then give the source link.” Clear steps lead to cleaner results.

The takeaway: Language models write. Connected tools do. When an assistant can calculate, look up, and format, you get fewer mistakes and more useful work, while you keep final control.

5) Why does AI sometimes make mistakes or “hallucinate”?

You open a travel site to plan a long weekend. You ask an AI helper for museum hours and a shortlist of must‑see exhibits. It gives a smooth answer, but one museum is closed on Mondays and the list includes a gallery the city retired last year. What happened? The model predicted a likely‑sounding reply instead of checking today’s facts.

Here is the core idea. A language model predicts the next likely word based on patterns in its training data. It does not know truth by itself. If the topic changed after training, or if your prompt is vague, or if the model never saw your exact case before, it can confidently produce a wrong answer. People call that a “hallucination.” A clearer term is fabricated detail.

Common causes you will see in the real world:

Outdated or incomplete data. Training snapshots freeze time. Without a browsing or lookup tool, the model cannot see updates.

Thin context. If you give two lines of background for a complex task, the model will fill gaps with guesses.

Ambiguous instructions. Vague prompts invite vague or invented answers. Clear constraints shrink the guesswork.

Made‑up citations or quotes. The model knows the style of references, not which ones are real unless it can search and verify.

Numbers without a calculator. Pure text models may mishandle math or unit conversions unless a calculator tool is used.

Domain edge cases. Niche medical, legal, or financial questions require verified sources and expert review.

You might be wondering, “So how do I reduce these mistakes?” That is a good question. Try this simple playbook that echoes guidance from the NIST AI Risk Management Framework (2023) and practical safety notes from the OECD AI policy observatory:

Provide context up front. State the goal, audience, and constraints. Example: “Create a 120‑word summary for older adults, in plain English, no sales language.”

Ask for sources. Say, “List the sources with links you used.” Then click through and skim. If no sources are given, treat the answer as a draft.

Prefer tools for facts and math. Request a calculator for totals or a web lookup for hours, prices, or policies.

Set the format. Ask for a table, bullet points, or step-by-step instructions. Structure makes errors easier to spot.

Test with a known answer. Give the model an example where you already know the solution. This reveals its accuracy before you trust it.

Run a quick second pass. Ask, “Double‑check the numbers and highlight any uncertainties.” Models can review their own work this way and often catch slips, though not every one.

It is normal to feel cautious, especially with money, health, or legal topics. Keep human oversight in place. For high‑stakes decisions, confirm details with primary sources, or consult a qualified professional. Treat the model as a speedy first draft that must be verified, not a final authority.

Mini tip: When facts matter, add the phrase “If you are not sure, say you are not sure.” You will get fewer polished guesses and more honest flags.

The takeaway: The model predicts patterns, not truth. Give better context, ask for sources, use tools for math and current facts, and keep your judgment in charge.

6) Where are you already using AI today?

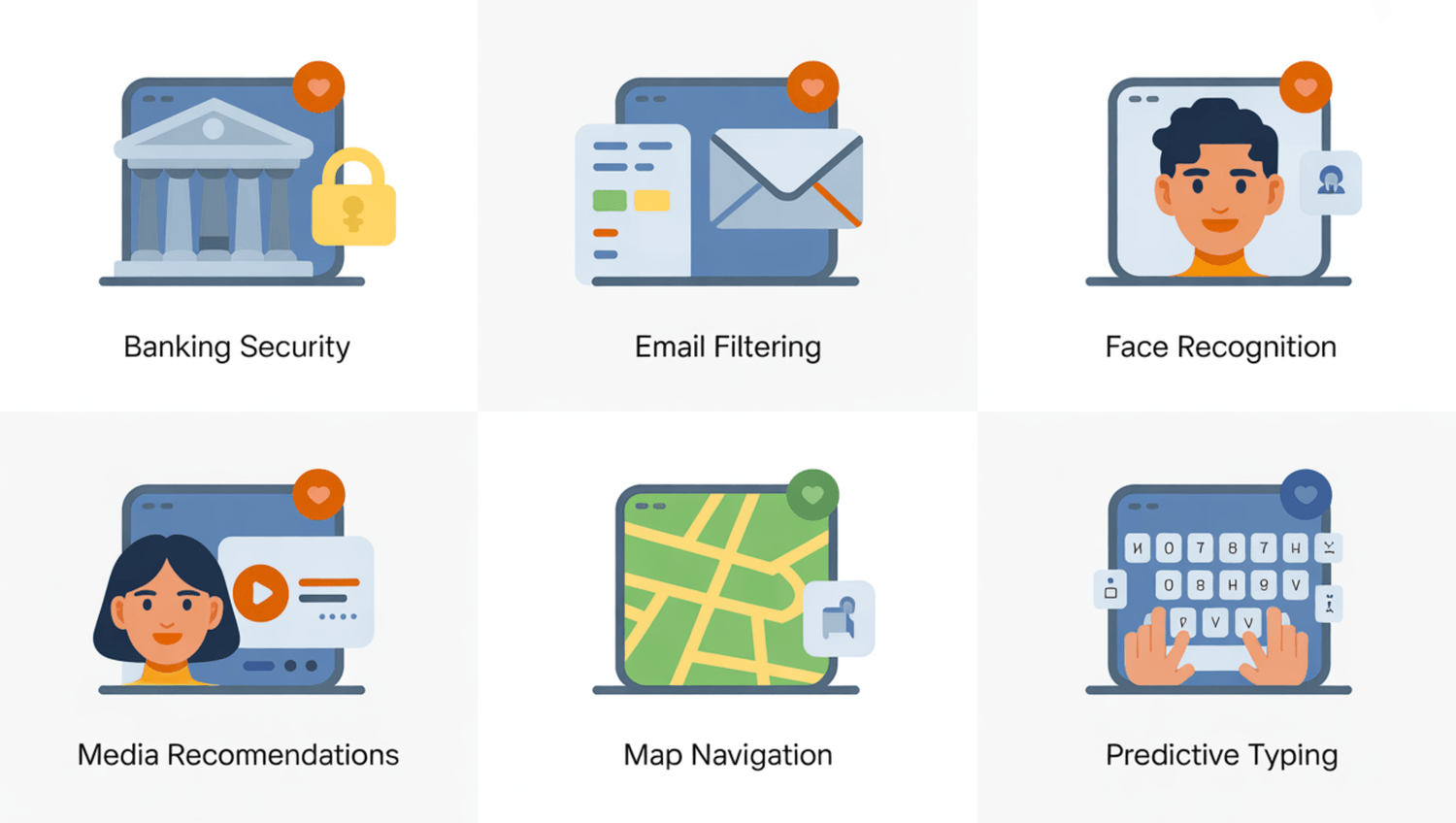

You open your bank app and see an alert about a charge that looks odd. A moment later you check your email and notice most of the junk never reached your inbox. Then your phone neatly groups photos of your granddaughter across different months. You did not flip any special switch. Quiet AI is already working in the background.

Start with money and safety. Banks use pattern spotting to flag transactions that do not fit your usual habits. Email providers do something similar with spam and phishing. They learn the look and feel of risky messages and send them to the junk folder before you ever see them. You might be wondering, “Is this perfect?” No. False alarms and misses happen, which is why your review still matters. But these systems catch a lot of trouble before it reaches you.

Next, photos and memories. Modern photo apps can sort by faces, places, or things like beach, dog, or birthday cake. The software learns from many examples of each category, then makes a fast guess on your camera roll. It can even clean up blurry shots or remove background noise in a video. Apple and Google both share easy overviews of how on-device learning powers these features while giving you controls for what to keep or delete (Apple, 2023; Google, 2023).

Entertainment is another everyday example. Streaming services study what you watched, paused, and rated. They suggest what to try next, which saves time but can also create a narrow tunnel of similar shows. A quick tip is to mix in manual picks so the system keeps your tastes broad. Shopping sites do the same with product recommendations, which can be helpful if you are comparing options, and annoying if you are done buying. You can clear or pause history in your account settings.
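To see why recommendations can narrow into a tunnel, here is a toy “because you watched” sketch in Python. The show titles and genre tags are invented, and real services blend many more signals (ratings, pauses, what similar viewers watched). The idea: count the genres you have watched, then rank unwatched shows by overlap.

```python
from collections import Counter

# Hypothetical catalog: show title -> genre tags.
catalog = {
    "Garden Rescue": {"home", "gardening"},
    "Kitchen Rescue": {"home", "cooking"},
    "Baking Class": {"cooking"},
    "Coastal Mysteries": {"crime", "drama"},
    "Space Frontier": {"science", "documentary"},
}

def recommend(watched, how_many=2):
    """Rank unwatched shows by how many of their genres you already watch."""
    taste = Counter(genre for show in watched for genre in catalog[show])
    scores = {
        show: sum(taste[genre] for genre in genres)
        for show, genres in catalog.items()
        if show not in watched
    }
    # Highest overlap first; ties keep catalog order.
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:how_many]

print(recommend(["Garden Rescue", "Baking Class"]))
```

Here “Kitchen Rescue” rises to the top, while shows outside your usual genres score zero and only appear as filler. Watching something different on purpose changes the genre counts, which is why mixing in manual picks keeps suggestions broad.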

Smart home tools bring AI into your hallway and kitchen. A video doorbell can spot packages or familiar faces. A thermostat can learn when you are usually home and adjust to save energy. Voice assistants can set timers, remember shopping lists, or control lights. It is normal to ask, “Is my data safe?” That is a fair question. Look for clear privacy dashboards, the ability to erase voice history, and simple ways to turn features off.

Even maps and typing use AI in quiet ways. Navigation apps predict traffic and reroute in real time. Your phone’s keyboard predicts your next word and corrects typos as you go. Search boxes suggest queries before you finish typing. Small helpers add up and remove friction from your day.

Mini tip: When a service shows you a recommendation, click “Not interested” or “Show me more like this.” Simple feedback teaches the system and keeps suggestions useful.

The takeaway: You already use AI in banking alerts, spam filters, photo sorting, recommendations, maps, and smart home tools. It saves time and adds safety while you keep control through settings and simple feedback.

7) Can AI actually keep you safer and more independent?

Imagine you are heading out for a walk. Your watch knows your usual route and reminds you that rain is likely in the next hour. Later, it nudges you to take your medication, then detects a hard fall and asks if you are OK. If you do not respond, it can alert a trusted contact. None of this replaces common sense or medical care. It does add a quiet layer of safety that many people find reassuring.

Start with money safety. Banks use pattern spotting to flag charges that do not match your normal habits. When a card is used in a new country or at odd hours, fraud systems look for clusters of risky signals and ask you to confirm. The U.S. Federal Trade Commission explains common fraud patterns and how alerts can reduce losses when you act quickly (FTC, 2024). Your role is simple. Keep notifications on, respond fast, and use two‑factor authentication in your banking app.
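The “clusters of risky signals” idea can be sketched in a few lines of Python. Everything here is an assumption for illustration: the three signals, the cutoffs (more than three standard deviations above your usual spending, a purchase between midnight and 5 a.m., a country other than home), and the rule that two or more signals trigger a confirmation request. Banks use far richer models, but scoring a charge against your own habits is the same principle.

```python
from dataclasses import dataclass
from statistics import mean, pstdev

@dataclass
class Charge:
    amount: float
    country: str
    hour: int  # 0-23, local time of the purchase

def risky_signals(charge, past_amounts, home_country):
    """Return the reasons this charge looks unusual for this customer (hypothetical rules)."""
    reasons = []
    typical, spread = mean(past_amounts), pstdev(past_amounts)
    if charge.amount > typical + 3 * spread:
        reasons.append("amount far above your usual spending")
    if charge.country != home_country:
        reasons.append("purchase in a new country")
    if 0 <= charge.hour < 5:
        reasons.append("purchase at an unusual hour")
    return reasons

def needs_confirmation(charge, past_amounts, home_country):
    """Ask the customer to confirm when two or more signals cluster together."""
    return len(risky_signals(charge, past_amounts, home_country)) >= 2

past = [42.0, 18.5, 60.0, 35.0, 27.5, 51.0]  # made-up recent charges
everyday = Charge(amount=38.0, country="US", hour=14)
odd = Charge(amount=900.0, country="BR", hour=3)

print(needs_confirmation(everyday, past, home_country="US"))  # False
print(needs_confirmation(odd, past, home_country="US"))       # True
```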

Now health and independence. Wearables can detect falls, track heart rhythm irregularities, and prompt regular movement if you sit too long. Apple describes how fall detection and emergency SOS work together so you can contact help even without reaching your phone (Apple Support, 2024). Google shares how Android and Fitbit devices offer similar features, including irregular rhythm notifications and safety check prompts (Google Safety, 2024). These tools are not diagnoses. They are early nudges that help you decide when to call a clinician.

Medication and routines are another win. Simple reminder apps can schedule doses, track streaks, and alert you when a refill is due. Many let you share adherence summaries with a caregiver. The National Institute on Aging offers plain‑language tips on building medication routines and using reminders to stay on track (NIA, 2023). You might be wondering, “Will this feel intrusive?” That is a fair question. Start with the least data needed, review what is shared, and turn features on step by step.

Home and travel safety benefit too. Smart cameras can spot packages or motion while filtering routine activity to reduce false alarms. Voice assistants can create safety checklists for trips, share your location with a chosen contact, or turn lights on at sunset when you are away. The trick is to keep controls simple. Choose devices with clear privacy dashboards and a big, obvious off switch.

Practical guardrails help you stay in charge:

Use strong passwords and two‑factor authentication on all accounts that touch money or health data.

Review alerts weekly. Mark false alarms and confirm real ones so systems learn your patterns.

Prefer tools that let you see, export, and delete your data.

Share access only with people you trust, and review sharing every few months.

It is normal to feel cautious when tools touch sensitive parts of life. Start small, test features in low‑risk situations, and keep your clinician and family in the loop for health and safety decisions.

Mini tip: If a device or app cannot explain what it collects, why it collects it, and how you can turn it off, pick a different one.

The takeaway: AI can support safety and independence through fraud alerts, fall detection, and timely reminders. You stay in control by choosing reputable tools, tightening privacy settings, and responding quickly to alerts.

8) How do you stay in control of privacy and data?

Let’s keep this practical. You do not need to be a tech pro to protect your data while using AI tools. A few habits make a big difference, and you can set them up in less than an hour.

Start with strong locks. Use a password manager so each account has a unique, long password. Turn on two-factor authentication for email, banking, cloud storage, and any AI app that stores your files. If your device and service support passkeys, use them. The U.S. Cybersecurity and Infrastructure Security Agency gives clear guides on these basics and why they stop most account takeovers (CISA, 2024).
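For the curious, here is roughly what a password manager does when it creates a “unique, long password,” sketched with Python’s standard `secrets` module, which is designed for security-sensitive randomness. The 20-character length and the character set are assumptions; your password manager will choose its own. In everyday use, let the manager generate and store these for you.

```python
import secrets
import string

# Assumed character set: letters, digits, and a few symbols most sites accept.
ALPHABET = string.ascii_letters + string.digits + "-_.!@#"

def new_password(length=20):
    """Build a random password with a cryptographically secure generator."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))

# One different password per account, never reused.
accounts = ["email", "bank", "cloud storage"]
vault = {account: new_password() for account in accounts}
for account, password in vault.items():
    print(account, "->", len(password), "characters")  # avoid printing the password itself
```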

Share less by default. Before you paste anything into an AI tool, ask yourself, “Would I be comfortable if this appeared on a postcard?” If the answer is no, remove names, addresses, or medical details. Many tools offer a privacy or incognito mode for sensitive work. Choose that when drafting contracts, health notes, or anything you would not want stored.

Look for transparency. Reputable services explain what data they collect, how long they keep it, and how you can delete it. The European Data Protection Board outlines your rights to access, correct, and erase data under the GDPR, which many global services follow even outside the EU (EDPB, 2023). The UK Information Commissioner’s Office offers plain-language checklists for picking tools with clear privacy controls (ICO, 2023).

Tidy your browsing. Use a modern browser with tracking protection and keep it up to date. Review cookie prompts, pick “necessary only” when possible, and clear site data for services you no longer use. Consider a separate browser profile for work and another for personal use so sites do not cross-pollinate your history.

Use local options when you can. If a feature works on-device, try that first. For example, on-device transcription or photo recognition can give you the benefit without sending files to a cloud server. Apple and Google both describe which features can run locally and how to turn them on or off (Apple, 2023; Google, 2023).

Control sharing with people too. It is normal to add a spouse or adult child to accounts for backup. Keep the list short, review it every few months, and remove anyone who no longer needs access. For health or legal items, keep a simple document that lists what is shared and with whom.

You might be wondering, “How do I spot a risky service?” That is a fair question. Red flags include no privacy policy, no way to export or delete your data, and pressure to upload ID documents without a clear reason. If a company cannot explain why it needs your data, do not give it. The FTC’s consumer resources are helpful for recognizing scams and high-risk requests before you click (FTC, 2024).

Practical weekly routine:

Monday five-minute check. Review recent sign-ins and alerts on your email and bank.

Midweek tidy. Clear downloads and old screenshots that contain personal info.

Friday review. In your main AI app, delete drafts you no longer need and empty the trash.

Mini tip: Create a simple one page privacy checklist and keep it near your computer. When you try a new app, run through the list before you sign up.

The takeaway: Use a password manager, two-factor authentication, and privacy controls. Share the minimum, prefer on-device features when possible, and stick with tools that explain what they collect and how to delete it.

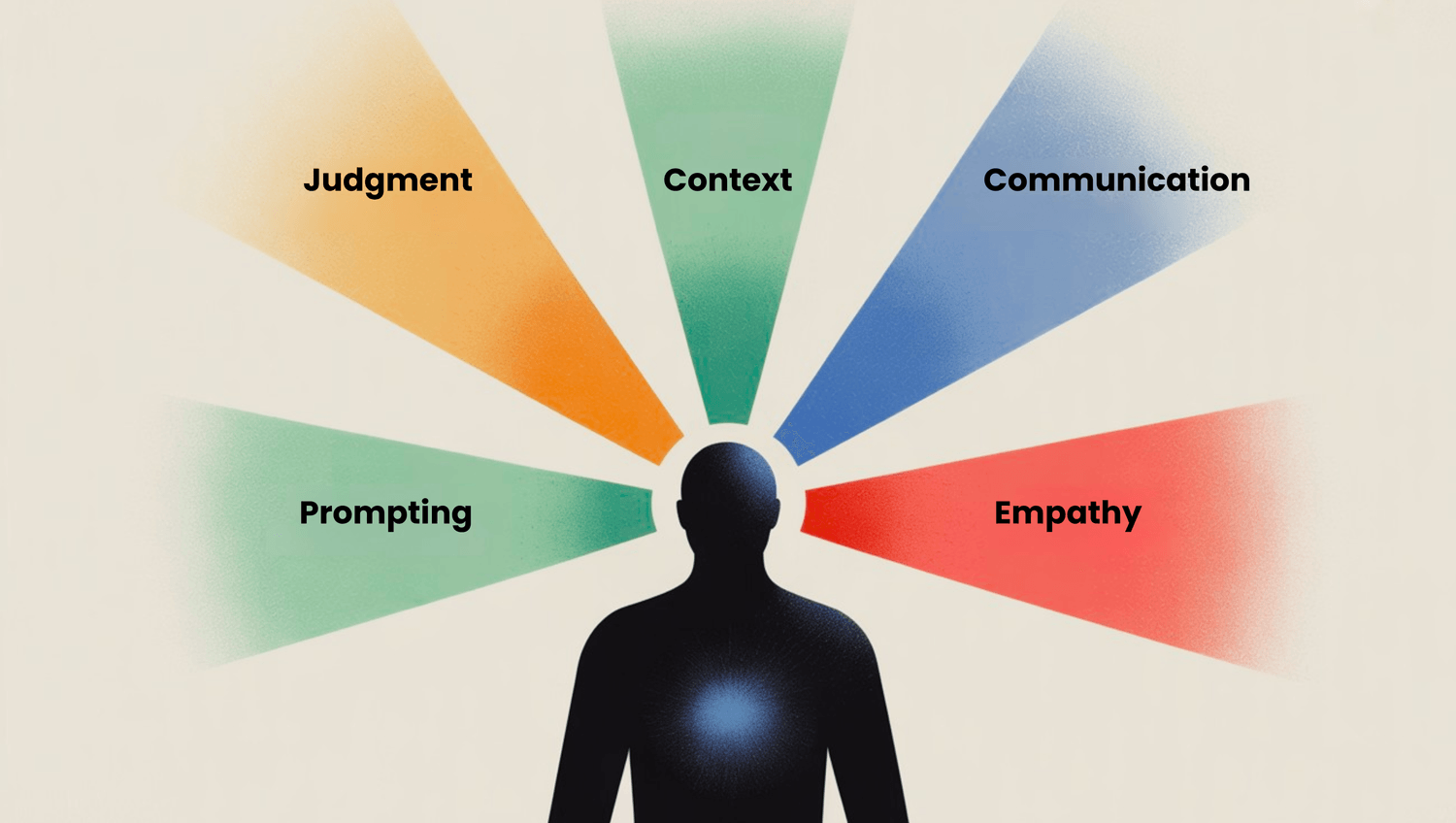

9) Which human skills matter more in the AI era?

Picture a typical Tuesday. You open your inbox, skim a few AI‑drafted summaries, then hop into a meeting where the plan still feels fuzzy. The tool gathered the notes. You still need to decide what matters and who does what next. That is the line between helpful software and human strength.

Here is the short version. AI drafts and sorts. Humans judge, decide, and motivate. The skills that rise in value are the ones that shape goals, add context, and earn trust.

Judgment and decision making. You weigh trade-offs, timing, and risk. A report might suggest three options, but only you know the budget, the team’s energy, and the customer promise. Research from the World Economic Forum (2023) lists analytical thinking, problem solving, and systems thinking among the top skills that will stay in demand.

Communication and framing. Clear writing and speaking turn a messy draft into a plan people can follow. You explain why the work matters, how it helps the customer, and what success looks like. The OECD highlights communication and collaboration as core skills that complement digital tools across jobs.

Context and domain knowledge. You know the rules, the edge cases, and the culture of your field. That context sets constraints for the tool. It also helps you spot when the output looks polished but wrong. The MIT Sloan Management Review describes this as complementarity: people provide direction and guardrails, and AI provides speed.

Empathy and trust building. Projects move when people feel heard. You coach, negotiate, and defuse tension. A model can suggest wording, but it cannot look a colleague in the eye or read a room. Your tone and timing still carry the day.

Prompting as a thinking skill. Good prompts mirror good thinking. You set a clear goal, include constraints, add examples, and ask for a specific format. Then you review and refine. This is not about magic words. It is about structured requests that map to the result you want.

You might be wondering, “Do I need to learn to code?” Not necessarily. For many roles, basic data literacy is enough. Know how to ask for a table, read a chart, and check a calculation. If you enjoy tinkering, simple scripts can help. If not, focus on clarity, sequencing, and review.

Try a quick practice loop:

Write your goal in one sentence.

List any limits, like time or budget.

Provide a short example of the style or format you want.

Ask for the output in a table or checklist.

Review, then ask for one improvement.

It is normal to feel unsure at first. Start with low-stakes tasks and build a small library of prompts that work for you.

Mini tip: When you want higher quality, ask, “What is missing or unclear in my request?” Then fix that and try again.

The takeaway: AI speeds up drafting and sorting. Your value grows in judgment, communication, context, empathy, and clear prompting that turns raw output into sound decisions.

10) Quick start: how can you try AI safely this week?

Let’s keep this simple and confidence-building. The goal is to try three small tasks, keep your data safe, and save a little time. You do not need special software. Any reputable AI assistant will work for this starter plan.

Step 1. Pick one everyday task. Choose something low risk and useful. Good options are email cleanup, trip planning, or a simple budget. If a task touches money or health, plan to verify results with primary sources. The U.S. National Institute of Standards and Technology reminds users to keep human oversight for important decisions (NIST, 2023).

Step 2. Write a clear prompt. Include the goal, audience, and constraints. Add one short example of what “good” looks like. Clarity cuts errors and guesswork, and it keeps you in charge of the result, in line with the OECD’s emphasis on transparency and user control.

Step 3. Ask for steps, not magic. Say, “Give me a step-by-step plan and highlight any assumptions.” Structure makes mistakes easier to catch and correct.

Step 4. Verify the parts that matter. When facts or numbers appear, ask for the source or use a calculator tool. The U.S. Federal Trade Commission suggests a simple habit: click through sources and be cautious with money-related claims (FTC, 2024).

Step 5. Save what works. If the result is helpful, save the prompt in a notes app. Build a tiny library you can reuse next time.

Ready to try? Copy these example prompts:

Email tidy up

“Summarize the long email below in three bullets for a busy reader. Then draft a polite two-sentence reply that confirms the date and asks one clarifying question. Keep the tone friendly and plain.”

Travel planning

“I will travel to Vienna for two days in October. Create a morning-to-evening plan with indoor options if it rains. Include estimated transit time between stops. List the official links you used so I can verify hours.”

Budget helper

“Turn this grocery receipt into a table with item, category, price, and a total. If any item is unclear, list it in a separate ‘check later’ row. Then calculate the monthly total if I repeat this shop once a week for four weeks.”

Red flags checklist before you trust the output

No sources for facts that change, like prices or opening hours.

Numbers without a calculator or clear method.

Confident claims in health, legal, or investment topics.

Pressure to upload ID documents without a clear reason. The FTC explains how to spot these asks and avoid scams (FTC, 2024).

A simple weekly routine

Monday. Try one new prompt on a small task.

Wednesday. Improve one saved prompt by adding an example.

Friday. Review results, save what worked, delete what you no longer need.

It is normal to feel cautious. Start small, double-check key details, and grow your library of prompts at your own pace.

Mini tip: For any task with numbers, add, “Show your steps and the calculator result.” You will spot errors quickly and gain trust in the process.

The takeaway: Keep it small, clear, and verified. One useful task, one clear prompt, one quick check, and you are on your way to safe, confident AI use.

Keep it small, clear, and verified

You do not need to become a tech expert to benefit from AI. Think of it as a helper that drafts, sorts, and suggests. You still set goals, keep context, and make decisions. Start with one small task this week. Give a clear prompt. Ask for sources when facts matter. Save what works and build your own tiny library. That is how confidence grows.

Want a hand getting started? Download my free AI Quick-Start Bundle, a step‑by‑step resource designed for beginners who want to use AI wisely, without the overwhelm.

Frequently Asked Questions

1) Is AI the same as automation?

Not exactly. Automation follows fixed rules. AI learns patterns from examples and makes predictions. Many tools mix both so they can handle routine tasks and also adapt when the input varies.

2) Does AI store my data forever?

It depends on the tool. Reputable services explain what they collect, how long they keep it, and how to delete it. Look for clear controls to export and erase data. See guidance from the EDPB (2023) and the ICO (2023).

3) What is a “hallucination” in AI?

It is a fabricated detail. The model predicts likely words rather than truth. Reduce the risk by giving context, asking for sources, and using calculators or web lookups for facts. See the NIST AI RMF (2023) for user guardrails.

4) Do I need to learn coding to use AI well?

No. Clear goals, examples, and review will get you far. Basic data literacy helps. Coding is optional.

5) Is AI safe for banking and payments?

Banks use AI to flag unusual activity. Keep your notifications on, use two-factor authentication, and respond quickly to alerts. The FTC (2024) offers practical steps to reduce fraud risk.

6) Can I use AI without sending everything to the cloud?

Often yes. Many phones and laptops can run features on device, such as photo recognition or voice typing. Check the privacy pages for your device maker. See Apple Privacy and Google Safety.

7) How do I choose a trustworthy AI tool?

Prefer tools with a clear privacy policy, data controls, and visible contact details. If a service cannot explain what it collects and why, skip it. The FTC (2024) has checklists for spotting risky services.

Sources:

Google Cloud — What is Artificial Intelligence (n.d.).

IBM — AI vs. Machine Learning vs. Deep Learning vs. Neural Networks (n.d.).

Google Developers — Introduction to Large Language Models (n.d.).

AltexSoft — Language Models and GPT Explained (n.d.).

NIST — AI Risk Management Framework (2023).

OECD — OECD AI Principles (n.d.).

Apple — Machine Learning and Privacy (2023).

Apple Support — Use Apple Watch to Detect a Hard Fall (2024).

Google — AI at Google and Safety and Privacy (2023–2024).

CISA — Secure Our World (2024).

EDPB — Your Data Protection Rights (2023).

ICO — For the Public: Your Data Matters (2023).

FTC — Consumer Advice and Data Spotlights (2024).

World Economic Forum — The Future of Jobs Report (2023).

MIT Sloan Management Review — Human‑AI Collaboration (n.d.).