What Is a Deepfake? A Simple Guide to Protecting Your Family

By Rado

Feeling unsure what’s real when a “video” or a late‑night call pops up? You’re not alone. The good news: you don’t need special software to protect your family. In this guide, you’ll get plain‑English basics, fast red flags anyone can use, and a simple family protocol that stops most scams before money leaves your account.

What is a deepfake, in plain English?

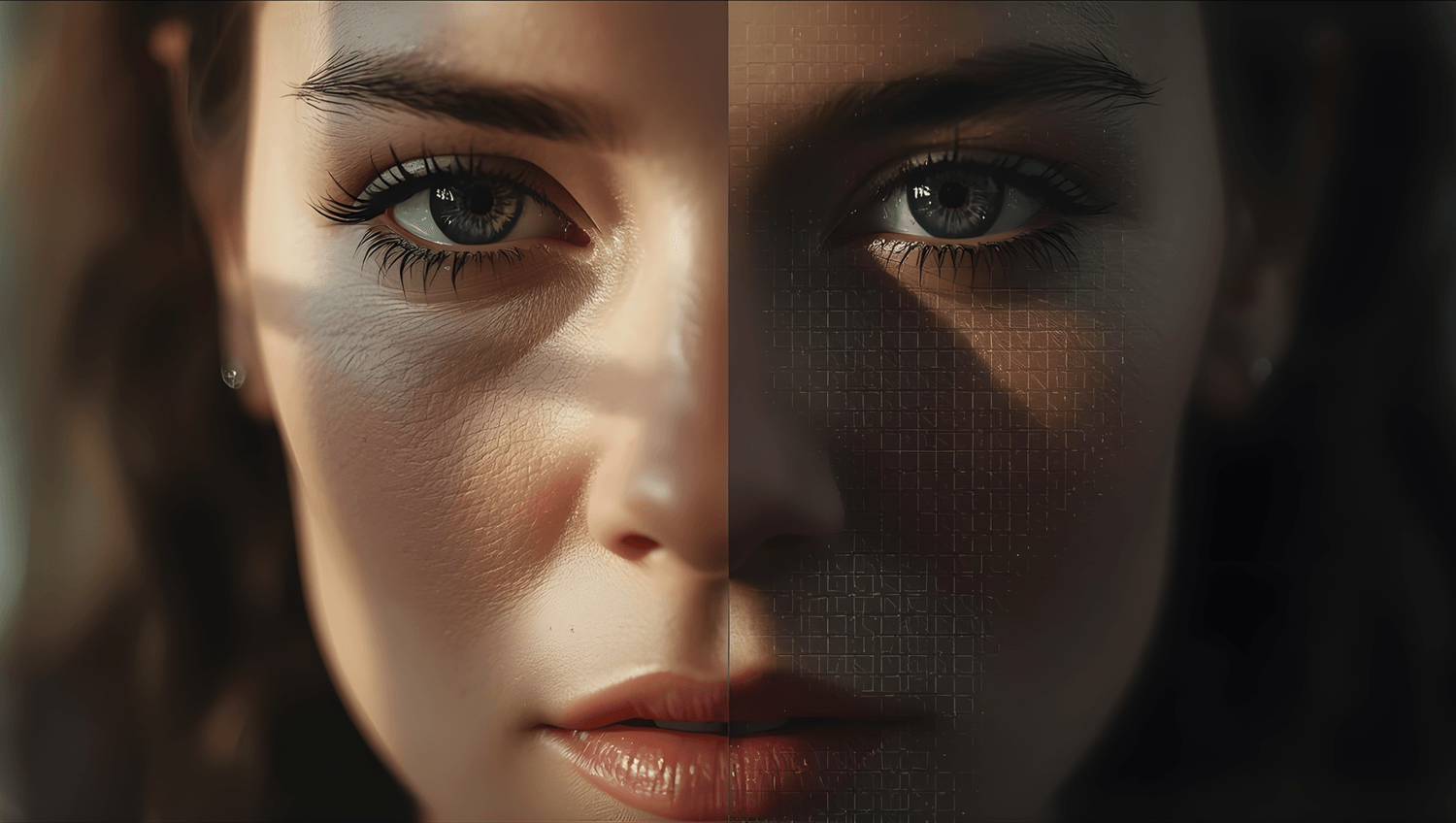

You get a short video that looks like your daughter asking for help. The face looks right. The voice sounds close enough. Your stomach drops. Before you act, here is the simple truth you can use.

A deepfake is a piece of media in which a computer imitates a real person. It can be a video, a photo, or a voice clip that looks and sounds like that person but was generated or heavily edited by AI. Some deepfakes swap faces in a video. Others clone a voice from a few seconds of audio. Newer tools mix both to create very believable calls and video messages. A plain rule helps here: if something tries to rush you, treat it as unverified until you check it.

So what makes deepfakes so convincing now? Modern tools are easier to use and train on huge libraries of voices and faces. Quality has jumped, and people are not great at spotting polished fakes by eye alone. Several security reviews have found that detection accuracy drops as realism improves, which is why simple verification beats gut feeling (see Reality Defender (2025); Security.org (2024)).

There are a few common flavors:

Video face swaps. A scammer pastes one face onto another body and syncs it to speech. Watch for stiff blinking, odd lighting, and lips that slip out of time with the words. Basic tips appear in Norton’s guide (2024).

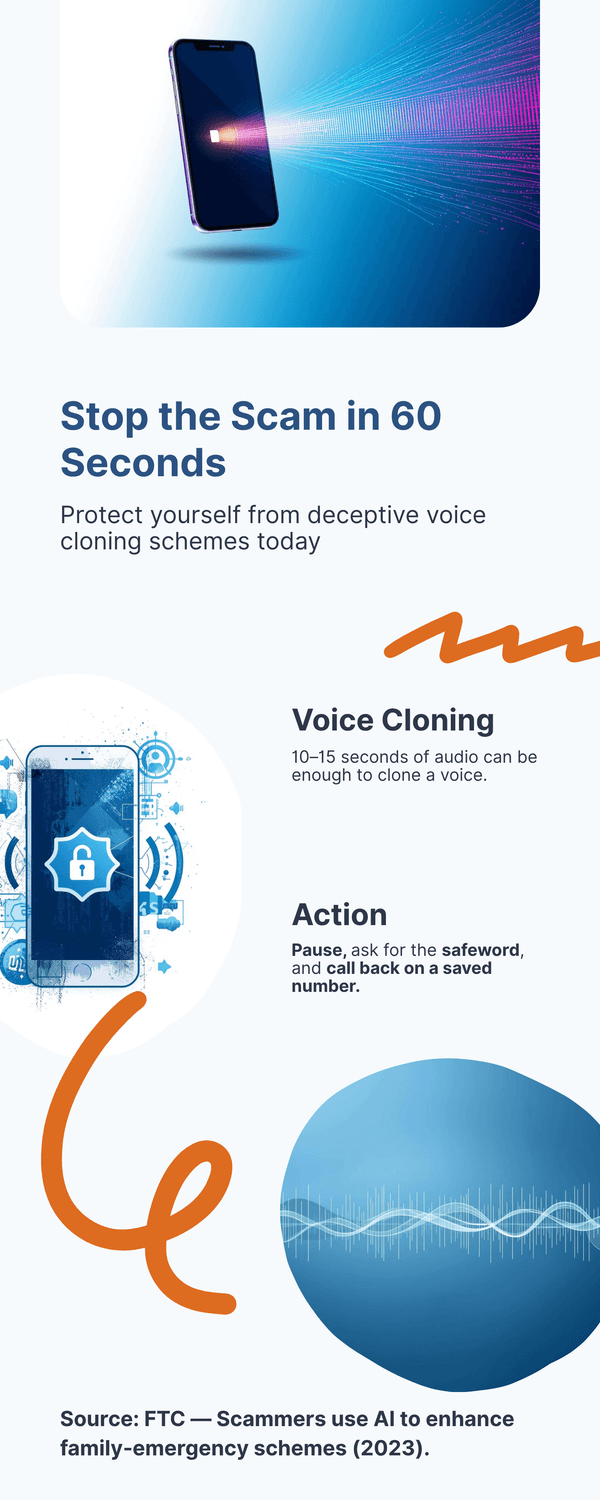

Voice cloning. A short sample from social media or a voicemail can be enough to create a copy that fools friends and family. That is why many agencies now warn about “family emergency” calls that sound real (FTC (2023)).

Image edits. Single photos can be altered to place a person at an event they never attended, or in a context meant to stir emotion or fear.

Mixed media. Video plus cloned voice during a live call or meeting is becoming more common. Case write‑ups show how this has been used to push urgent transfers at work (WEF on a corporate case (2024)).

“Do I need special software to stay safe?” You do not. Your best defense is a short checklist and one family rule. Ask yourself: Does this message rush me? Is the request unusual for this person? Can I pause and call back using a saved number? Those questions slow the moment down so your brain can catch up.

It is normal to feel unsure when a voice sounds familiar. Treat the media as a clue, not the proof. Then verify through a second channel you already trust. When you do that, most deepfakes lose their power.

The takeaway: A deepfake is simply convincing pretend media. Do not rely on your eyes or ears alone. Use a calm pause, a call back on a saved number, and a simple family rule to confirm before you act.

Why deepfakes matter for your family right now

Imagine a Sunday afternoon. You are marinating dinner when your phone buzzes with a panicked voice message from your son. It sounds like him. He says he needs money now. Your heart races. Would you send it before checking? Many smart people would. That is why this matters today, not someday.

Deepfakes and AI‑assisted scams are rising fast. Industry monitoring shows steep year‑over‑year growth in AI‑driven fraud attempts, with notable spikes in phishing and account‑takeover tactics (see Sift (2025)).

Consumer tests also find that as realism improves, humans struggle to tell authentic from fake media at a glance (Security.org (2024)). In short, the tech got good, cheap, and easy to use. That shifts the burden to us to pause, verify, and protect.

Why families? Scammers play on trust and urgency. A cloned voice can lift details from public posts and turn them into a believable emergency. Older adults are frequent targets, and the losses add up quickly. Reports tally billions lost to fraud among people 60+ in the United States alone (NCOA (2024)). At work, the risks spill into home life. A well‑timed video meeting with a faked executive face has already pushed staff to approve large transfers, showing how convincing mixed audio‑video can be (World Economic Forum (2024)).

You might be wondering, “Is this just rare headline stuff?” It is more common than it seems because many attempts never get reported. Security teams warn about family‑emergency voice spoofs, bank and tech‑support imposters, and romance‑investment blends that build trust over weeks (FTC (2023)). Even a 10‑second voice clip scraped from a public video can be enough to clone a tone that convinces a grandparent (McAfee (2023)).

So what does this mean for your household? First, speed is the enemy. Any message that rushes you to move money, share codes, or keep a secret deserves a full stop. Second, habits beat software. A private family safeword, call‑back on saved numbers, and a 30‑minute money freeze window prevent most damage. Third, a few settings make you harder to target: limit who can see your posts, trim public voice samples, and turn on alerts for new payees and large transfers.

It is normal to feel a bit nervous after hearing about these scams. The goal is not to panic. It is to practice a simple plan so your family moves slower than the scam. Ask yourself: Does this sound like the person I know? Is the request typical for them? Can I confirm on a second, trusted channel before I act?

The takeaway: Deepfakes matter because they borrow our loved ones’ voices to force snap decisions. Slow the moment, verify on a saved number, and use a simple family protocol to keep money and trust where they belong.

The most common deepfake scams to watch for

Picture a quiet evening. Your mother gets a call that sounds like you. The voice shakes, asks for secrecy, and pushes for a quick transfer. Would she pause? Many don’t. Here are the patterns that show up again and again.

1) The “grandparent” or family‑emergency call. A cloned voice claims there’s been an accident, an arrest, or a hospital bill. The caller insists on gift cards, wire, or crypto. The fix is simple: hang up and call back using a saved number, or ask for the safeword. The FTC (2023) warns that urgency plus unusual payment is a classic red flag.

2) Bank, government, or tech‑support imposters. The voice sounds official and references real‑looking account details. They may text a code and then ask you to read it back. Real banks and agencies do not ask for passcodes over the phone. The FBI IC3 lists these as top fraud types targeting older adults.

3) Workplace money moves that spill into home life. A video meeting appears with your boss’s face and voice, instructing you to help approve a transfer. It looks convincing because it blends video and audio cloning. A well‑publicized case shows how staff were tricked into a large transfer via a deepfake group call (World Economic Forum (2024)). If you ever get a surprise payment request, use a second channel to verify.

4) Romance‑investment blends. A new online friend sends charming voice notes and occasional video clips to build trust. Later they pitch a “can’t‑miss” investment and guide you to move funds off‑platform. Many victims describe weeks or months of grooming before the ask. The FTC (2023) and AARP (2024) both note the rise of AI‑boosted romance and crypto scams.

5) Account‑takeover bait. You get a call that seems to come from your carrier or a trusted service. The agent sounds real and says there is suspicious activity. They ask for one‑time codes or a recording of your voice to “confirm identity.” Never share codes. Call back using the number on your card or the official app. Cloned voices can be made from short clips taken from social posts (McAfee (2023)).

You might be wondering, “How can I remember all this?” You do not need to. Use one rule: pause, verify, and pay only after confirmation on a saved number. Ask yourself: Is this payment method unusual? Does this person normally ask me to keep secrets? Can I check with a second trusted person before I move money?

It is normal to feel embarrassed if you hesitated or almost sent money. There is no shame in double‑checking. Scammers want speed and silence. Your goal is to slow down and switch channels.

The takeaway: Most deepfake scams use the same trio: urgency, secrecy, and unusual payment. Break the script by pausing, calling back on a saved number, and confirming with someone you trust before you act.

Quick tests: red flags you can spot in seconds

You are making tea when a video pops up. A familiar face asks for a quick favor. Your first instinct is to help. Before you act, run these fast checks. They work in the kitchen, on the bus, or half‑asleep on the couch.

1) The speed test. Does the message push you to act right now or keep a secret? Urgency plus secrecy is the classic setup. If the payment method is gift cards, wire, or crypto, stop. Ask yourself: What happens if I wait 30 minutes? That pause removes the scammer’s advantage.

2) The call‑back test. Do not reply inside the same thread or to the incoming number. Hang up and call back using a saved contact. If it is a company, use the number in the official app or on the back of your card. This simple switch defeats most deepfake voice and video plays (FTC (2023)).

3) The face‑and‑voice scan. Look for tiny mismatches. Do the lips drift out of sync? Are blinks stiff or too perfect? Is the lighting a bit off from frame to frame? Guides show these are common artifacts in fake videos (Norton (2024); SoSafe (2024)). For audio, notice flat tone, odd pacing, and missing background sounds you expect from that person.

4) The link and handle check. Hover or long‑press to preview the real URL before you tap. Does the domain look slightly misspelled? Search the headline with the word “fact‑check,” or paste a suspicious URL into a safe checker. Simple steps like these catch a lot of fakes (EDPS (2023); CheckPhish by Bolster (2025); EasyDMARC URL Checker (2025)).

5) The context quiz. Ask two quick questions: Is this request typical for this person? Did they use a phrase or nickname they normally would? If anything feels off, require your family safeword or move to a second trusted channel.

6) The money friction rule. Before any transfer, add friction. Turn on bank alerts for new payees and large amounts. Keep a short list of safe numbers for your bank and carrier. A little friction now saves hours later.

“What if I am wrong and it really is urgent?” It is normal to worry about that. A real friend or relative will understand a quick verification step. If someone gets angry that you paused to confirm, that is another red flag.

Mini checklist you can screenshot: Pause 30 minutes. Call back on a saved number. Scan for lip‑sync and odd lighting. Hover to preview links. Use the safeword. Add friction before money moves.

The takeaway: In seconds, you can spot the pattern. Slow down, switch channels, and add one layer of friction. Those small steps block most deepfake attempts before they cost you money or peace of mind.

Family safety protocol that stops most scams

It’s late, and a call comes in from a number you don’t recognize. The voice sounds like your niece. She whispers, “Please don’t tell anyone.” Your heart skips. Here’s the protocol that flips the script from panic to control.

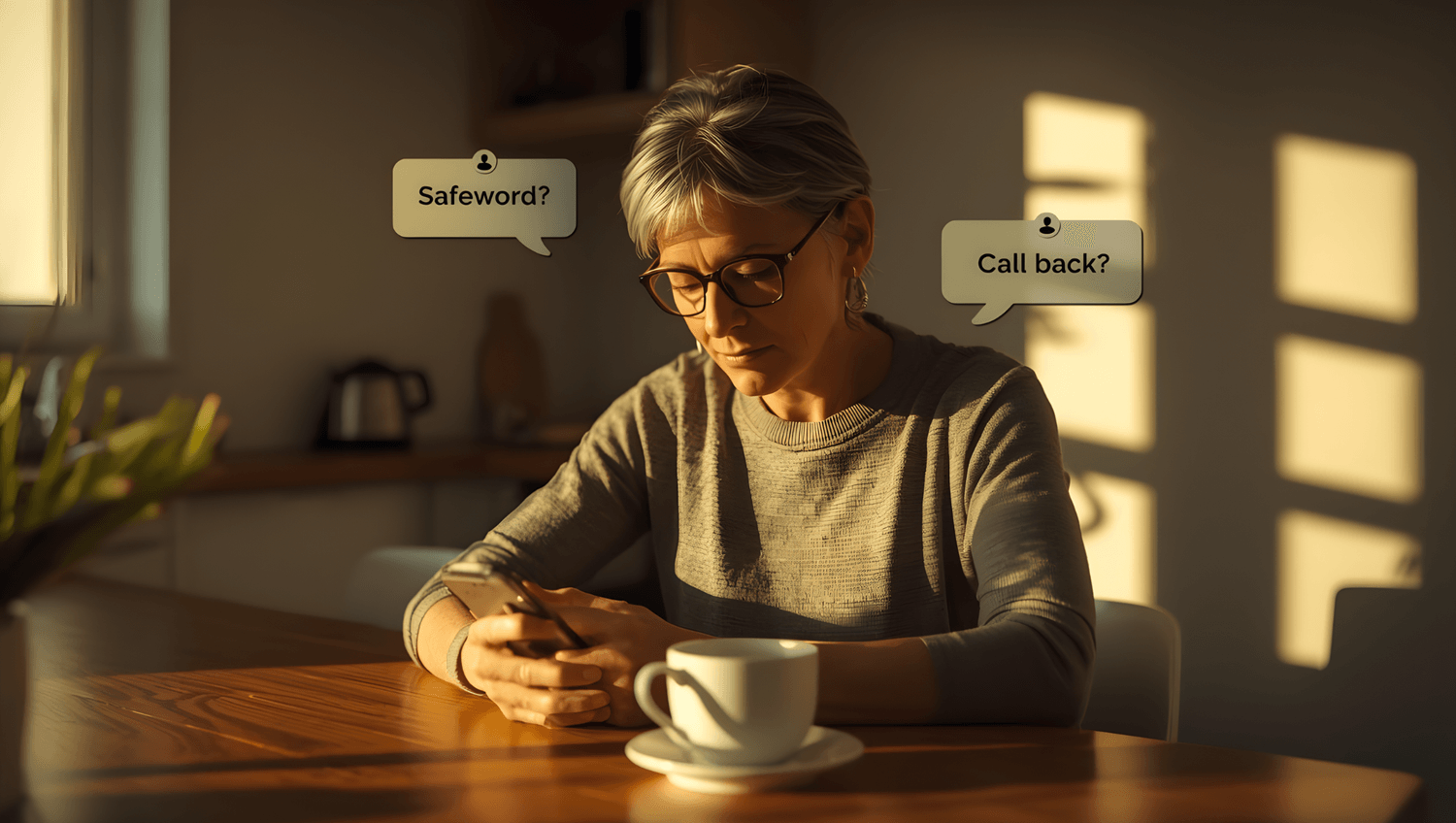

1) Set a private family safeword (3 minutes). Choose a plain word or two‑word phrase that you never post online. Share it only in person or on a video call you start. Do not text or email it. Refresh it every 6 months. Guidance on this simple step appears in practical explainers and law‑enforcement interviews (CBS News citing FBI guidance (2024); StaySafeOnline (2024)).

2) Use out‑of‑band verification. If a request comes by call or message, switch channels before you act. Hang up and call back using a saved contact card. If the claim is about a bank or company, use the number in the official app or on the card itself. This defeats most voice‑clone plays (FTC (2023)).

3) Create a 30–60 minute money freeze window. No transfers, gift cards, crypto, or password sharing until after verification. Put this rule on the fridge. Even if the emergency is real, a hospital or police department can wait that long while you call back.

4) Keep a printed contact tree. List 2–3 trusted numbers for each close family member, plus your bank’s fraud line and mobile carrier. Tape it near the phone. If one number fails, you have backups.

5) Run a 2‑minute drill each quarter. Read the script together: “If you get a scary call, ask for the safeword. If they don’t know it, hang up and call me back on my saved number.” Practice once so the real moment feels familiar.

6) Normalize reporting: no shame, no blame. If someone gets tricked or almost tricked, thank them for speaking up. Collect the number, screenshots, and timeline. If money moved, call your bank’s fraud line first, then report to IC3 and the FTC.
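If someone in your family likes to tinker, the first three steps can be read as a small decision rule. The Python sketch below is one conservative reading of the protocol, not an official procedure: the `Request` fields, the `next_step` name, and the choice of 30 minutes (the low end of the freeze window) are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Low end of the 30-60 minute freeze window; an illustrative choice.
FREEZE_MINUTES = 30


@dataclass
class Request:
    """A hypothetical summary of an urgent call or message."""
    knows_safeword: bool          # the caller gave the family safeword
    confirmed_by_callback: bool   # you reached the person on a saved number
    minutes_since_request: int    # time elapsed since the request arrived


def next_step(request: Request) -> str:
    """Return the next action under one conservative reading of the protocol."""
    # No safeword and no callback yet: treat the contact as unverified.
    if not request.knows_safeword and not request.confirmed_by_callback:
        return "hang up and call back on a saved number"
    # A correct safeword helps, but this sketch still requires a second channel.
    if not request.confirmed_by_callback:
        return "call back on a saved number before any money moves"
    # Verified, but still inside the freeze window.
    if request.minutes_since_request < FREEZE_MINUTES:
        return "wait out the freeze window"
    return "verified: decide calmly"


print(next_step(Request(knows_safeword=False,
                        confirmed_by_callback=False,
                        minutes_since_request=2)))
```

The order of the checks is the point: verification comes before the clock, so waiting alone never unlocks a payment.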

“Will I slow down help if it’s real?” A genuine friend or official will not object to a short pause while you verify. If they push, that is another red flag.

The takeaway: A safeword, a call‑back on saved numbers, and a short money freeze stop most deepfake scams cold. Practice once when things are calm so your family can move with confidence when it matters.

Phone, email, and social settings to reduce risk in 10 minutes

You do not need an IT background to dial down your risk. Give yourself ten quiet minutes and walk through these switches. They remove the easy openings scammers love.

1) Lock down your voice and visibility.

Set your social profiles to “friends” or “connections” for posts and stories; avoid public voice notes.

Remove old public videos that include clean voice samples. Trim your bio so it does not list travel dates or family details.

Review who can look you up by phone or email.

2) Strengthen your phone account.

Add a carrier account PIN and a port‑out lock (SIM‑swap protection). Check your carrier’s app or support page.

Turn on spam call filtering and voicemail transcription; this helps you spot odd language quickly.

Store your bank’s fraud line and your carrier’s support number in contacts.

3) Add friction to your money moves.

Turn on alerts for new payees, wire transfers, and large transactions.

Require two‑factor authentication (2FA) for banking, email, and password managers. Prefer app‑based codes over SMS.

Use a spending cap or approval rule on accounts you use for family payments.

4) Clean up email in 3 steps.

Enable 2FA and set up recovery options.

Create a simple rule that sends “password reset” and “security alert” emails to a high‑priority folder you can scan quickly.

Use a password manager to generate unique logins; change any reused passwords.

5) Safer links in seconds.

Hover or long‑press to preview real URLs.

If uncertain, paste the link into a checker like CheckPhish (Bolster, 2025) or EasyDMARC URL Checker (2025).

For viral videos or claims, search the headline with the word “fact‑check” and see if trusted outlets address it (EDPS TechSonar (2023)).

6) Update and back up.

Update your phone, browser, and apps; turn on automatic updates.

Back up your device so you can recover quickly if something goes wrong.

“Which two should I do first?” Start with the carrier PIN/port‑out lock and bank transfer alerts. Those two steps block many costly scenarios.

The takeaway: Ten minutes of settings today lowers your exposure for months. Start with carrier PINs and bank alerts, then add 2FA and link‑checking habits for a strong everyday shield.

Tools that help (no jargon)

Your cousin sends a clip that looks like your aunt making a wild claim. You do not want an argument in the family chat. Before you reply, use a few simple tools. No special training. No new accounts in most cases.

1) Quick link and site checks. If a message pushes you to click or pay, pause and scan the link. Paste it into CheckPhish by Bolster (2025) or EasyDMARC URL Checker (2025). These services flag known phishing domains and risky redirects. Ask yourself: Does the domain name match the organization exactly? If it is off by a letter, stop.

2) Reverse image search for look‑again context. Drag or upload the image into an image search to see if it appeared before with a different headline. Reverse search helps expose recycled images and out‑of‑context photos. Try Google Images (2025) or Bing Visual Search (2025). You might be wondering, “Will this work on video?” Take a screenshot of a key frame and search that.

3) Deepfake detection services. Automated detectors are improving but they are not perfect. Tools like Reality Defender (2025) analyze audio, image, and video signals for telltale artifacts. Treat results as a nudge, not the final word. Verification by calling back on a saved number still wins.

4) Fact‑check smart search. When a claim sounds explosive, search the headline with the word “fact‑check.” This pulls up work from established teams and can surface debunks quickly. A practical primer comes from EDPS TechSonar (2023).

5) Phone and account safety helpers.

Turn on call filtering and voicemail transcription in your phone settings or carrier app so you can scan messages without engaging.

Set breach alerts for your email on Have I Been Pwned (2025). If you get a hit, change the password and enable two‑factor authentication.

6) Work context? Use built‑in checks. If a surprising request arrives at work, verify it inside your official tools. Use your company’s internal directory or chat to confirm the person before acting. Real companies welcome that pause. Case studies on video‑meeting fraud show why a second channel matters (World Economic Forum (2024)).
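For readers comfortable with a little code, the “off by a letter” domain check from tip 1 can be sketched in Python. This is a minimal illustration, not a replacement for the link checkers above: the `TRUSTED_DOMAINS` list, the function names, and the two-character threshold are assumptions chosen for the example.

```python
from urllib.parse import urlsplit

# Hypothetical allow-list: replace with the exact domains your family uses.
TRUSTED_DOMAINS = ["example-bank.com", "example-carrier.com"]


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: single-character inserts, deletes, or substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # delete from a
                               current[j - 1] + 1,       # insert into a
                               previous[j - 1] + cost))  # substitute
        previous = current
    return previous[-1]


def check_link(url: str) -> str:
    """Classify a full URL (including https://) as match, lookalike, or unknown."""
    host = (urlsplit(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if not host:
        return "unknown"
    # Exact domain or one of its subdomains.
    for trusted in TRUSTED_DOMAINS:
        if host == trusted or host.endswith("." + trusted):
            return "match"
    # Off by a character or two from a trusted name: the classic lookalike.
    for trusted in TRUSTED_DOMAINS:
        if edit_distance(host, trusted) <= 2:
            return "lookalike"
    return "unknown"


print(check_link("https://examp1e-bank.com/login"))
```

Note that “unknown” does not mean safe. It only means the address is not on your list, so the call‑back rule still applies.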

It is normal to feel unsure about which tool to open first. Start with the path of least effort: link checker, reverse image search, then a quick fact‑check search. Ask yourself: Do I need to act at all right now? Can I call back on a saved number and remove the guesswork?

The takeaway: Tools help, but habits protect. Use a link checker and a quick reverse image search to spot obvious fakes, then confirm through a trusted channel. Let the tools inform you, and let your verification rule make the decision.

What to do if you think you’ve been targeted or scammed

First, take a breath. Feeling shaken is normal. Your goal now is to slow things down, stop any money movement, and gather what you need to recover.

1) Stop transfers and secure your accounts.

If you started a payment, call your bank’s fraud line right away and ask about reversal or hold options.

Freeze or lower limits on cards and accounts you suspect.

Change passwords on email and banking—start with email, then financial apps. Turn on two‑factor authentication if it is not already on.

2) Save evidence while it is fresh.

Keep call logs (screenshots), voicemails, texts, chat threads, transaction confirmations, and any files.

Write a short timeline: when it started, what was said, amounts requested, where links pointed.

3) Report promptly.

If money moved or sensitive data was shared, file reports with IC3 (the FBI’s Internet Crime Complaint Center) and the FTC, both US agencies. These reports help banks and investigators connect the dots.

Also report to your local police if funds were lost; ask for a case number for your bank.

If the scam used a specific platform (e.g., WhatsApp, Facebook, your mobile carrier), report through their in‑app tools so they can flag the account.

4) Warn your circle.

Tell close family and any group chats where the contact happened. Share the timeline and the “do not engage” note so others do not get pulled in.

If a work contact was spoofed, alert your manager and IT so they can notify others.

5) Get support.

Scams can feel isolating. Reach out to the AARP Fraud Watch Helpline for practical guidance and emotional support for you or an older parent.

“What if I feel embarrassed?” That feeling is common. The fastest recoveries happen when people speak up early. You are doing the right thing by acting now.

The takeaway: Secure accounts, save evidence, and report quickly to IC3/FTC and your bank. A short timeline plus screenshots speeds up recovery—and helps protect others.

Talk with older parents and teens without panic

Saturday morning, you are making coffee while your mother scrolls on her tablet and your teen edits a video for school. You want them safe without turning breakfast into a lecture. Here is a calm way to do it.

Start with reassurance, then a simple rule. Try: “You do not have to memorize tech words to stay safe. If a scary message or urgent call comes in, we do two things: ask for our safeword and call back using a saved number.” Keep it short. People remember rules they say out loud. Have each person repeat the line once.

Use real‑life examples they relate to. For older parents, mention the classic “emergency” call with a gift‑card request. For teens, talk about fake celebrity clips, AI‑edited classmate photos, or a friend’s account that suddenly asks for money. Both groups benefit from one habit: pause and verify on a second channel. Practical scripts and tip sheets are available from AARP (2024) and Common Sense (2024).

Make it a 2‑minute drill, not a lecture. Set a timer. Say: “If you ever get a scary message from me, ask for the safeword. If I do not know it, hang up and call my saved number.” Then swap roles and practice once with each person. It is normal to laugh—it means the rule will stick.

Give them the “three-question check.”

Does this push me to act right now or keep a secret?

Is the request unusual for this person?

Can I call back on a saved number or confirm with a second person?

If any answer worries you, stop and verify.

Set teen‑specific guardrails without drama.

Turn on private accounts and limit who can message.

Discuss not sharing clean voice clips publicly and what to do if someone posts a fake.

If a risky clip appears, save evidence and tell a trusted adult. School policies and platform tools can help remove manipulated content (StopBullying.gov, 2025; Meta Safety Center, 2025).

Address pride and embarrassment with empathy. You might say, “It is normal to feel silly for double‑checking. I would rather have you call me twice than send money once.” Remove shame early so people report attempts. That early warning protects everyone.

“What if Dad refuses new rules?” Meet him halfway. Post the safeword and the callback numbers by the landline. Add bank alert texts to a phone he already uses. Gentle nudges beat arguments.

Conversation starters you can borrow:

“If you got an urgent call from me tonight, what word would you ask for?”

“Whose number would you call if my phone was ‘lost’ and someone texted from it?”

“If a friend’s account DMs you for money, what is our next step?”

The takeaway: Keep it calm and concrete. Practice a 2‑minute safeword drill, post the callback numbers, and agree on the three‑question check. Confidence grows when the plan is simple and practiced together.

Build habits to stay ahead (without stress)

Think of safety like brushing your teeth. Two minutes, most days, keeps problems small. You do not need new apps or a long checklist. A few steady habits, repeated, make you hard to trick.

Make a tiny monthly check. Pick the first Sunday of the month. In ten minutes, review privacy settings on your main social accounts, skim bank alerts, and clear any old public clips that have clean voice samples. Ask yourself: What did I share this month that a stranger could reuse? Do I need to trim anything?

Refresh the safeword twice a year. Put a recurring 6‑month reminder on your phone. During the check‑in, read the family script out loud and update the printed contact tree. Law‑enforcement and consumer groups keep urging families to use callback verification and private words because they work in the messy real world (FTC, 2023; AARP, 2024).

Keep software current without thinking about it. Turn on automatic updates for your phone, browser, and key apps. Updates fix holes that scammers like to combine with social tricks. If you have not updated in a while, do it today, then let it run by itself.

Use a password manager and 2FA everywhere it matters. A manager creates unique passwords without you memorizing them. Add app‑based two‑factor codes for email, banking, and your password manager itself. This habit blocks many account‑takeover plays, which often arrive alongside deepfake contact attempts.

Create a simple “fraud kit.” Keep three things handy: your bank’s fraud number, your carrier’s support number, and your family contact tree. Tape a copy by the landline or fridge. Save a digital copy in a shared note. When pressure hits, you will not be hunting for details.

Bookmark trusted sources. Follow a short list rather than chasing every headline. Good starting points: FBI IC3 (2025) for alerts and reporting, AARP Fraud Watch (2025) for plain‑language guidance, and your bank’s security page for product‑specific tips. Industry monitoring also tracks scam trends you can skim quarterly (Sift, 2025).

Practice a tiny drill each quarter. Two minutes is enough. One person plays the caller. The other asks for the safeword, hangs up, and calls back on a saved number. Laugh, then check the clock. You will feel the calm that practice creates.

“Will this take too much time?” Most of it runs on reminders. Set and forget. When a real‑looking clip or call arrives, your family will already know the next step.

Habit calendar you can copy:

First Sunday monthly: privacy sweep, alert review, voice‑sample cleanup.

Every 6 months: safeword refresh, print a new contact tree.

Quarterly: 2‑minute drill, update phone and app settings.
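If you would rather generate the dates than count them on a wall calendar, here is a small Python sketch of the habit calendar. It makes two assumptions the calendar above does not state: the quarterly and six‑month tasks are attached to the same first‑Sunday slot, and they start in January. The function names are made up for the example.

```python
from datetime import date, timedelta


def first_sunday(year: int, month: int) -> date:
    """Return the first Sunday of the given month."""
    first = date(year, month, 1)
    # date.weekday(): Monday is 0 and Sunday is 6.
    days_until_sunday = (6 - first.weekday()) % 7
    return first + timedelta(days=days_until_sunday)


def habit_calendar(year: int) -> list[tuple[date, list[str]]]:
    """Build one (date, tasks) entry per month for the given year."""
    schedule = []
    for month in range(1, 13):
        tasks = ["privacy sweep", "alert review", "voice-sample cleanup"]
        if month % 3 == 1:  # January, April, July, October (assumed start)
            tasks.append("2-minute drill, update phone and app settings")
        if month % 6 == 1:  # January and July (assumed start)
            tasks.append("safeword refresh, print a new contact tree")
        schedule.append((first_sunday(year, month), tasks))
    return schedule


for day, tasks in habit_calendar(2025):
    print(day.isoformat(), "-", "; ".join(tasks))
```

Paste the printed dates into your phone’s reminders and the calendar runs itself.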

The takeaway: Small, repeatable habits beat long checklists. Automate updates, use a manager and 2FA, refresh the safeword, and run a tiny drill. Confidence comes from practice, not perfection.

To conclude...

You do not need to be a tech expert to protect your family from deepfakes. A short pause, a call back on a saved number, and a private safeword beat most scams. Add two quick settings today—carrier PIN/port‑out lock and bank transfer alerts—and you have a strong everyday shield. Keep the plan simple, practice once, and move at your pace. Confidence grows with small habits.

So, your next step? If you want a hand getting started, download my free AI Quick-Start Bundle. It includes the Family Safeword Protocol for setting a safeword, the family quick‑check protocol for a fake‑news crisis, and the Quick 60-Second Checks for Fake News.

Frequently Asked Questions

Q1) How can I tell a deepfake video from a real one?

Start with the speed test. If a clip tries to rush you to act or keep a secret, stop. Scan for small glitches: lip‑sync drift, stiff blinking, odd lighting. Then verify through a second channel you already trust. Guides from Norton (2024) and SoSafe (2024) list common tells.

Q2) Can scammers clone a voice from a short clip?

Yes. Even a few seconds can be enough to copy tone and phrasing. That is why family‑emergency scams using cloned voices are on the rise, according to FTC (2023) and McAfee (2023). Your fix: a safeword and a call back on a saved number.

Q3) Are older adults targeted more often?

Yes. Losses among people 60+ are high, and scammers lean on trust and urgency. The NCOA (2024) tracks common schemes and how to respond. Keep a printed contact tree and the bank’s fraud number handy.

Q4) Should I use deepfake detector apps?

They can help, but they are not perfect. Services such as Reality Defender (2025) analyze audio, images, and video for artifacts. Treat results as a hint, not proof. Verification on a second, trusted channel still wins.

Q5) What if I already sent money or shared a code?

Call your bank’s fraud line now and ask about reversal or holds. Change passwords, turn on two‑factor authentication, and file reports with IC3 and the FTC. Save screenshots and write a quick timeline. Early reporting improves recovery.

Q6) How do I talk to teens without scaring them?

Keep it short and practical. Practice a 2‑minute drill: “Ask for the safeword. If I do not know it, hang up and call my saved number.” For platform tips, see Common Sense (2024) and StopBullying.gov (2025).

Q7) What quick settings cut the most risk?

Turn on a carrier PIN/port‑out lock, enable bank alerts for new payees and large transfers, and add app‑based 2FA to email and banking. Use link checkers like CheckPhish (2025) and EasyDMARC (2025) when you are unsure.

Sources:

AARP — AI Scams and Voice Cloning: What To Know (2024).

CBS News — FBI urges families to use a safeword to fight AI scams (2024).

EDPS — TechSonar: Fake News Detection (2023).

FBI IC3 — Common Frauds and Elder Fraud Resources (accessed 2025).

FTC — Scammers use AI to enhance family‑emergency schemes (2023).

McAfee — Guide to Deepfake Scams and AI Voice Spoofing (2023).

NCOA — Top Online Scams Targeting Older Adults (2024).

Norton — What Are Deepfakes? (2024).

Reality Defender — Deepfake Myths and Facts (2025).

Sift — Digital Trust & Safety Index: AI Fraud Trends (2025).

SoSafe — How to Spot a Deepfake (2024).

World Economic Forum — Arup Deepfake Fraud Case Video (2024).

CheckPhish by Bolster — Phishing URL Scanner (accessed 2025).

EasyDMARC — Phishing URL Checker (accessed 2025).

Common Sense Media — What Parents Need to Know About Deepfakes (2024).

StopBullying.gov — Cyberbullying: What Is It (2025).